Teaching climate change in the context of the climate system: A mixed method study on the development of systems thinking skills in German 7th grade students regarding the climate

Link to the JSE Winter 2023 General Issue Table of Contents

Gorr JSE General March 2023 PDF 0417

Abstract: This paper reports part of a larger study on the development of systems thinking skills in German 7th grade comprehensive school students regarding the climate. Research has shown a fragmented understanding of climate change among students that hardly accounts for the dynamic interrelations in the climate system and may pose a barrier to understanding adaptation and mitigation strategies (Shepardson et al., 2017, 2011; Calmbach et al., 2016). While much is known about the impact of short-term interventions on the general system understanding of students, what is lacking to date is 1) a specific intervention on climate system understanding and 2) insights into the process of developing system understanding in students. Helpful insights in this context come from Conceptual Development theories, for they allow the development of systemic thinking to be viewed in terms of conceptual expansion or conceptual change. Starting from these desiderata, a teaching-learning sequence was developed based on the SYSDENE model of system competence (Frischknecht-Tobler et al., 2008). In the sequence, young learners systematically link experiences from formal science education with experiences at three non-formal learning environments. A mixed-methods approach was used to explore the impact of this 3-month sequence on 19 students in 7th grade. A written pre-/post-test suggested a significant improvement in Climate System Reconstruction for the group (pre-test Median = 6.75 vs. post-test Median = 12.5, Wilcoxon Test: p = .003, r = .82). However, a qualitative analysis of classroom conversations, interviews and concept maps indicated that cognitive development toward a higher level of system thinking was neither continuous nor reached by every student. Moreover, the SYSDENE model’s Competence Area “Describe System Model” proved critical. Being able to describe the main climate system factors is not sufficient; one also needs to be able to distinguish weather from climate and grasp several scientific concepts related to the climate (e.g. greenhouse effect, water cycle, evaporation, reflection) in order to understand climate as a system.

Keywords: Systems thinking, Climate system, climate change education, non-formal learning, cognitive change

Introduction

Humans tend to think in linear and descriptive terms (Vollmer, 1986), which makes it particularly challenging to understand problems arising from complex systems such as climate. In fact, the Earth’s climate is a complex, dynamic system whose properties cannot be fully explained by the properties of its system components, and whose dynamics are subject to nonlinear growth laws. The climate system includes several subsystems, namely the sun, the atmosphere, the hydrosphere, the cryosphere, the geosphere, the biosphere, and the anthroposphere. These different subsystems are in physical and chemical exchange with each other, and thus permanently interact and depend on each other. Moreover, climate is a socio-scientific issue, in which scientific, economic, and ecological aspects are strongly intertwined.

Young people reveal a fragmented understanding of climate change that hardly accounts for the mentioned dynamic interrelations in the climate system (Shepardson et al., 2017; Calmbach et al., 2016; Shepardson et al., 2011). As Shepardson et al. put it: “This lack of understanding regarding feedbacks and the inter-relation between climatic components is a significant stumbling block for understanding not only the causes and effects of climate change, but also the adaptive and mitigation strategies that can be devised” (ibid., p.328). Shepardson et al. conclude that climate change should be taught in the context of the climate system.

In the project of which this article is part, a teaching-learning sequence has been developed to tackle this problem. To understand whether this intervention helps students develop an understanding of the complex dynamic processes in the climate system, the development has been empirically guided and assessed. Since not only system understanding is important for this, but also a change in complex ideas, this study includes theories of conceptual development when looking at the learning process.

Literature Review

Empirical studies have documented young people’s reliance on monocausal thinking when searching for solutions to problems or trying to deal with factual complexity (Sweeney & Sterman, 2007; Assaraf & Orion, 2005). This problem is by no means limited to the concept of climate. As early as 2009, Haugwitz and Sandmann reported: “International school performance studies such as PISA and TIMSS show considerable deficits in […] interconnected knowledge of German students in the natural sciences” (p. 89). According to the authors, the core of this problem lies in the “complex structure of the natural sciences, which teaching can hardly do justice to” (ibid., p. 89 f.). For instance, German students are said to regularly deal with climate and climate change from the perspective of only one specific discipline at a time, often without relating those insights to the underlying scientific principles (Umwelt im Unterricht, 2015).

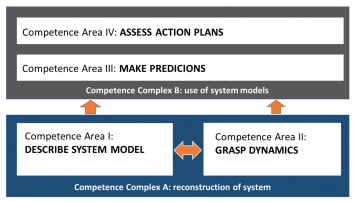

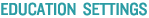

Apart from the specific climate context, teaching systems thinking in the classroom is not a new endeavor. Even if this is not specifically anchored in the German curricula, various models of a general system competence have been proposed (Mehren et al., 2018; Rieß et al., 2015; Frischknecht-Tobler et al., 2008; Assaraf & Orion, 2005; Ossimitz, 2000). Although these models differ in the number of competence levels or areas, they all distinguish levels or areas that focus on basic knowledge about systems and an understanding of system organization from levels or areas that relate more to knowledge application and/or a system-appropriate intention to act. The model of the Swiss SYSDENE group (Frischknecht-Tobler, Nagel & Seybold, 2008, 27f.), for example, proposes the four Competence Areas (I) “Describe system model”, (II) “Grasp dynamics”, (III) “Make predictions”, and (IV) “Assess action plans” (ibid., p. 30), see Table 1. The Competence Areas I and II together form the competence complex “Reconstruction of System” and areas III and IV form the competence complex “Use of System Model”. The existence of these two main competence complexes has been empirically validated by Mehren et al. (2018).

Although several authors (Clausen, 2015; Tschekan, 2009; Siebert, 2007) consider a change of setting, authentic encounters, and a combination of different teaching methods essential for teaching complex relationships, only Clausen’s studies (Clausen, 2015; Clausen & Christian, 2012) are known to incorporate out-of-school learning environments for building systems literacy in students. Other studies employed simulation software (Mischo & Rieß, 2008; Klieme & Maichle, 1994), hands-on models (Bell, 2004; Komorek, 1997) or physical games (Frischknecht-Tobler et al., 2008) at school. Some approaches at least combined analogue and digital materials (Brockmüller, 2019; Feigenspan & Rayder, 2017; Sommer, 2006; Assaraf & Orion, 2005).

Most of the studies mentioned above followed an experimental design measuring the short-term effects of their instructional interventions by means of a pretest/posttest procedure (Brockmüller, 2019; Bräutigam, 2014; Mischo & Rieß, 2008; Hlawatsch et al., 2005). The following overarching findings for teaching and measuring systems competence can be identified from these studies:

- Systems competence, or systems thinking, breaks down into different skill areas that can be viewed as at least additive components, and possibly as stages that build directly on each other (Mehren et al., 2018; Rieß et al., 2015; Bräutigam et al., 2009; Ossimitz, 2000).

- It is possible to promote systems thinking through teaching (Brockmüller, 2019; Bräutigam, 2014; Mischo & Rieß, 2008; Assaraf & Orion, 2005; Hlawatsch, 2005; Maierhofer, 2001; Ossimitz, 2000). Nevertheless, some studies show that only a certain percentage of students adopts a truly systemic understanding (Sommer, 2005; Bell, 2004; Steinberg, 2001; Klieme & Maichle, 1991).

- A one-time intervention using only one method seems less effective for building broader systems understanding than a combination of texts, videos, simulations and discussions (Brockmüller, 2019; Mischo & Rieß, 2008).

- Few studies so far have based their empirical investigations on longer interventions of several months (Bollmann & Zuberbühler, 2016; Assaraf & Orion, 2005).

- The assessment of skills in the different domains or levels should be analogous to the requirement of these domains. Basic, domain-specific knowledge that falls more into a basal competence area can possibly be determined with a simple knowledge test; higher-level or more complex system competence areas, on the other hand, are more likely to be determined with graphical representations or reasoning patterns (Bollmann-Zuberbühler, 2010, p.27).

- The intervention studies described above have almost exclusively developed and tested domain-unspecific system understanding that is not tied to specific natural systems. Exceptions are Brockmüller (2019) and Assaraf and Orion (2005) in geography and Clausen (2015) in biology. However, general process-related competencies have limitations, because “without content, competencies can neither be developed nor used” (Stanat, 2018, p. 20).

The diagnosis of systems competence or the measurement of its development was done in almost all studies by means of written tests. These included a combination of multiple-choice questions, argument-counterargument tasks, the transformation of complex problems into flow and effect diagrams, and vice versa, and predicting possible outcomes of different scenarios. Very few of these studies examined changes in system competence using concept maps, interviews, or drawings (Clausen & Christian, 2012; Sommer, 2005; Bell, 2004). However, since the system competence models mentioned above assume a change in complex ideas, it would be helpful to consider theories of conceptual development when asking if and how students learned.

As Wilhelm and Schecker (2018) noted, empirical studies in recent decades have repeatedly revealed inconsistency, contradictions, and instability in student conceptions (ibid., p. 50). This is reflected in diSessa’s (2018) heuristic framework, Knowledge in Pieces (hereafter ‘KiP’). According to KiP, there is a profound difference in the knowledge system of a layperson compared to that of an expert. To explain a phenomenon, a layperson draws on loose, intuitive fragments (‘p-prims’) that are only slightly abstracted from everyday experience. An expert, on the other hand, has a stable, differentiated, and domain-specific knowledge system. Achieving this requires that p-prims are incorporated in a meaningful way, which happens reluctantly and often needs support (Amin, Smith & Wiser, 2014). According to diSessa, adequate conceptual understanding only emerges after years of adaptation towards expertise. On the way there, new elements are added to a knowledge system that so far seemed coherent to the learner, which may temporarily lead to increased inconsistency (Hopf & Wilhelm, 2018).

Project Context of the Study

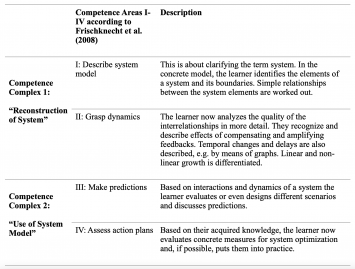

Within the framework of the project, a teaching-learning sequence was developed following a Design-Based Research (DBR) approach (Figure 1). In DBR, development proceeds as an empirically validated multi-step process (Baumgartner, 2003). The core of this sequence was a systematic linking of the interdisciplinary natural science education of German comprehensive schools with three non-formal learning environments. After a preliminary phase, characterized by an in-depth study of the literature and by the planning of the teaching concept and the research methods, a total of three intervention phases followed.

Phase 1 primarily tested initial learning tools and research methods in the context of a four-day vacation offer for interested young people. From these empirical findings, the didactic means and research methods were adapted, refined and differentiated. In the following Phase 2 they were applied for the first time in the context of interdisciplinary science teaching of a 7th grade at a German comprehensive school. Phase 3 ran as part of the weekly compulsory elective lessons over the school term of 2018/2019 at a different comprehensive school. A total of 17 teaching units took place distributed over three months.

The structure of the sequence was based on the SYSDENE model of System Competence (Figure 2).

Figure 2. The SYSDENE Model of System Competence with its four Competence Areas (CA I-IV) by Frischknecht-Tobler, Nagel & Seybold (2008)

From the other models mentioned above, only very limited realistic and achievable skills for 13-year-old students could be derived. For example, Rieß et al.’s model (2015) assumes that at the very basic level, students already master all abstract system-theoretical fundamentals (and therefore terms such as “linear”/“nonlinear” and “feedback”) and that at the highest level of systems competence they would be able to independently assess the structure, the validity and the predictive uncertainty of different systems models (ibid., p. 18).

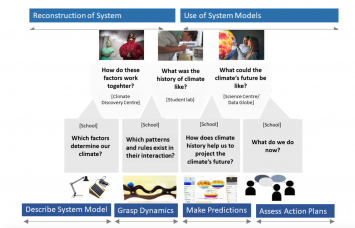

The teaching-learning sequence described here was structured by seven questions that build on each other and that the students pursued in the respective places: at school (medium grey) and outside of school (light grey), see Figure 3.

Figure 3 Teaching-Learning Sequence based on the SYSDENE model. The sequence’s aims and contents are explained further in Appendix 1.

First, the students explored the system elements of climate through experiments at school, exemplified in Figure 3 by a model illustrating the angle of incidence of the sun. At the Klimahaus Bremerhaven, a climate discovery center, they then emotionally and physically experienced how these factors interact and form stable climate zones. Back at school, the students used hands-on models to explore the patterns and rules in the interaction of system elements. These include, for example, cycles, chain reactions or, shown in the graphic, tipping points (for an explanation of the models, see Appendix 1/Day 5). At the MARUM lab at Bremen University, the students then analyzed a sediment drill core to reconstruct climate fluctuations in the Earth’s history. Here they also applied first concepts, for example, the significance of tipping points for the climate history of the Earth. With their knowledge of the interaction of system elements and climate fluctuations, the pupils now modeled the climate using the Monash University Interactive Simple Climate Model (https://sci-web46-v01.ocio.monash.edu/mscm/overview_i18n.html?locale=EN). This model simulates the most important physical processes of the climate system in a simplified way. Through virtual experiments, the importance of individual components of the climate system (e.g. ice, oceans, clouds, CO2) and their interactions can be investigated by learners. The dynamic interaction of climate factors across the Earth and the possible future effects of human intervention could then once again be vividly experienced by the pupils using a 3D animated data globe at the Universum science center Bremen. The final activity was a quiz at school, in which the group that collected the most complex arguments on various statements regarding climate change and mitigation strategies won. Appendix 1 traces the entire sequence planning in the sense of a didactic backward logic (Richter & Komorek, 2019).
This planning is oriented towards the subject logic of the contents and focuses on the learning processes that need to take place in order for the students to be able to reconstruct the contents as intended. Appendix 1’s color saturation (left column) marks the Competence Areas of the SYSDENE model CA I “Describe system model” (light gray), CA II “Grasp dynamics” (medium gray) and CA III/IV “Make predictions and assess action plans” (dark gray).

Study Objectives and Research Questions

From the literature review, two research desiderata have become apparent. Firstly, no study is known to the author that has been devoted to the development of systemic thinking in the specific context of climate. Secondly, advocates of the Knowledge-in-Pieces theory postulate that large-scale cognitive changes can only be traced if one also looks at details of learning in small time segments (diSessa, 2018; Campbell, 2012). This acquisition process itself has so far largely remained in the dark when it comes to systems thinking development. Therefore, the author aimed this study at deriving a description of cognitive development patterns towards system competence in the special context of the climate.

To answer the main research question “How does the learners’ conceptual understanding of climate as a system change throughout the sequence?”, several specific sub-questions were addressed, of which the following three are the focus of this article:

- Does the 3-month intervention render a change in students’ ability to reconstruct the organization and the dynamics of the climate system?

- What patterns in the development of system competence can be traced throughout the sequence?

- To what extent do these developments correspond to the SYSDENE model of system competence?

Methodology

Since the entire study was set in the regular classroom of a comprehensive school and volunteer schools and teachers had to be recruited for the in-depth intervention, this study relied on convenience sampling. All procedures followed were in accordance with the ethical standards of the Lower Saxony State Education Authority, Office for Schools and Education. Informed consent was obtained from all students’ parents for the children being included in the study.

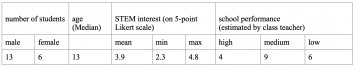

The sample of this third DBR phase consisted of 19 seventh grade students of an integrated comprehensive school who had selected “Natural Science” as their first or second desired course. At the integrated comprehensive school, biology, chemistry, and physics are taught integratively in the subject of natural sciences at secondary level I (corresponding to ISCED level 2). The performance spectrum was heterogeneous, as is typical for this school type, with a total of three pupils with special needs and five pupils with language impairments. Overall, grades clustered in the medium range, but the group showed quite a high affinity for STEM subjects, see Table 2.

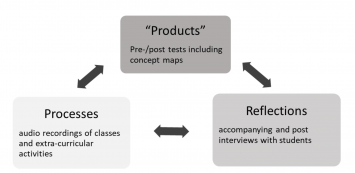

DBR is considered a methodological framework in which the data collection methods are determined by the context and the development goals, so that “all available data sources and research strategies [are used] that contribute to answering the research question” (Döring & Bortz, 2016, p. 73). Therefore, a mix of methods was adopted for the study in that explorative-qualitative methods were supported by statistical data. Thus, this study should be viewed as an embedded type of mixed-methods research with a focus on qualitative data. In total, three perspectives on the development process, namely ‘products’, ‘processes’ and ‘reflections’, were illuminated, and different data collection instruments were used for each (see Figure 4).

Figure 4 Three perspectives on the development process and the data collection instruments applied for each

The performance of the participating students was assessed by a test one week before the implementation of the sequence and one week after its completion. The intervening process was traced with the help of audio recordings of the conversations in the respective learning environments (see Figure 5). As can be seen in Figure 5’s blue and dark grey rectangles, the sequence was divided into three thematic phases (I, II, and III/IV) for the data analysis to better anchor the development process over time. These thematic phases were oriented towards the SYSDENE model described in the literature review and in the Project Context of the Study chapter.

To complete the picture by means of reflections, accompanying interviews were conducted with selected students at three points during the sequence. A total of six students were selected: two students with low school performance, two with medium school performance and two students with high school performance. Two students of comparable ability levels were interviewed together in order to create a conversational rather than an interrogative atmosphere.

Pre-/post-test

For the pre-/post-comparison, a short test was developed that almost exclusively mapped the SYSDENE competence complex “System Reconstruction” and thus the Competence Areas CA I “Describe Models” and CA II “Grasp Dynamics”. Existing tests of domain-specific system understanding were judged to be too difficult to use in the field. They would have exceeded the duration of a lesson by far and would most likely have demotivated the seventh graders. A valid picture of their abilities could thus hardly have been derived in this way. In favor of a manageable test length for the 13-year-old students, the modelling and problem-solving skills depicted in Competence Areas III and IV were traced solely through student interviews and audio recordings of the class discussions. In the unannounced test, only those aspects were evaluated that are relevant to this sequence, so that a clear area-specific understanding of the climate system could be recorded. The test (see Appendix 3) comprised six questions with two to three sub-questions each plus a section on demographics and STEM interest. Altogether, it could be completed in less than 45 minutes.

Relating to CA I “Describe Models”, the students had to define the term “system” as well as the terms “stable” and “unstable” and apply them to climate and weather. They were also asked to reconstruct the climate system using a concept map with ten given terms that had to be connected in a meaningful way. Other tasks required an understanding of more dynamic interactions in the climate (corresponding to CA II “Grasp Dynamics”). In this section, the students had to explain a supposed contradiction: locally very low temperatures despite global warming. Furthermore, they were confronted with different situations that visualized chain reaction, feedback and tipping point. The students had to predict the outcome of these situations, both in the pre-test based on their prior assumptions and in the post-test based on their experience with the analogue models. In a second step, they were asked to apply each situation to processes in the climate system.

Most of the tasks were open-ended and level-unspecific, and thus allowed answers at different levels. The competence level is hence not reflected in the task itself, but in the coding instructions for the task. To increase reliability, which is typically limited for tests with open-ended questions, the judgement of a second, independent rater was included in the evaluation. For this purpose, a scoring matrix was developed, whose wording was adapted on the basis of joint pilot assessments. Finally, the arithmetic mean was calculated for each test from the assessments of both raters. In order to derive performance differences of the group before and after the sequence, a Wilcoxon signed-rank test for dependent, nonparametric samples was calculated using SPSS software. Since six students missed the last class, the post-test could only be collected from 13 of the 19 pupils. Due to the small sample size the data were not normally distributed, hence the decision to use a nonparametric test.
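The averaging of the two raters' assessments and the paired pre-/post-comparison can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the SPSS procedure used in the study: the function names are hypothetical, the test uses the normal approximation with a tie correction, pairs with a zero difference are dropped, and the effect size is assumed to be r = Z/√n, with n the number of non-zero pairs. Any scores passed in are placeholders, not study data.

```python
import math
from statistics import mean


def average_rater_scores(rater_a, rater_b):
    """Return the per-student arithmetic mean of two raters' scores."""
    return [mean(pair) for pair in zip(rater_a, rater_b)]


def wilcoxon_signed_rank(pre, post):
    """Wilcoxon signed-rank test for paired scores (normal approximation).

    Returns (w_plus, w_minus, z, p_two_sided, r). Pairs with a zero
    difference are dropped; tied absolute differences share an average rank.
    The effect size r = z / sqrt(n) is an assumed convention.
    """
    diffs = [b - a for a, b in zip(pre, post) if b != a]
    n = len(diffs)
    if n == 0:
        raise ValueError("all paired differences are zero")

    # Rank the absolute differences, averaging ranks within tie groups.
    ordered = sorted(range(n), key=lambda idx: abs(diffs[idx]))
    ranks = [0.0] * n
    tie_term = 0.0
    i = 0
    while i < n:
        j = i
        while j + 1 < n and abs(diffs[ordered[j + 1]]) == abs(diffs[ordered[i]]):
            j += 1
        avg_rank = (i + j) / 2 + 1  # positions are 0-based, ranks 1-based
        for k in range(i, j + 1):
            ranks[ordered[k]] = avg_rank
        group_size = j - i + 1
        tie_term += group_size**3 - group_size
        i = j + 1

    w_plus = sum(rank for rank, d in zip(ranks, diffs) if d > 0)
    w_minus = sum(rank for rank, d in zip(ranks, diffs) if d < 0)

    # Normal approximation with tie-corrected variance.
    mu = n * (n + 1) / 4
    sigma = math.sqrt(n * (n + 1) * (2 * n + 1) / 24 - tie_term / 48)
    z = (min(w_plus, w_minus) - mu) / sigma
    p_two_sided = math.erfc(abs(z) / math.sqrt(2))
    r = z / math.sqrt(n)
    return w_plus, w_minus, z, p_two_sided, r
```

With very small samples, an exact test may give a somewhat different p-value than this approximation, so the sketch is best read as an illustration of the logic rather than a replacement for a statistics package.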

For analyzing the results of test task 3, the concept map, an evaluation method practiced by Clausen and Christian (2012) was adapted. The authors propose an ordinal scaled scoring system for determining the quality of the statements made on a map. The scoring scheme takes the statements’ complexity into account: simple, descriptive statements are scored lower than hierarchical relationships, and these in turn are scored lower than causal relationships. To the resulting total score, the number of system elements mentioned (up to 11) was added. As Appendix 5 shows, the scoring system was adapted to the study’s context. For example, system feedback was also accounted for over and above the aspects highlighted by Clausen and Christian (ibid.). Furthermore, in addition to clearly incorrect statements, which scored zero points, partially correct statements were also considered, e.g. if there was a correct causal relationship, but the term was used vaguely due to the students’ limited prior knowledge.
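The logic of such an ordinal scoring scheme can be sketched in Python. The point values and the partial-credit fraction below are hypothetical placeholders chosen for illustration only; the values actually used in the study are documented in its Appendix 5 and are not reproduced here. The function and constant names are likewise assumptions.

```python
# Hypothetical point values per statement type (assumed, not from the study).
RELATION_POINTS = {
    "descriptive": 1,   # simple, descriptive statement
    "hierarchical": 2,  # hierarchical relationship
    "causal": 3,        # causal relationship
    "feedback": 4,      # system feedback (added in the adapted scheme)
}
PARTIAL_CREDIT = 0.5    # assumed fraction for partially correct statements
MAX_ELEMENTS = 11       # upper bound on counted system elements


def score_concept_map(statements, elements):
    """Score one concept map.

    statements: list of (relation_type, correctness) tuples, where
        correctness is "correct", "partial", or "incorrect".
    elements: list of system elements named on the map.
    Returns the statement quality score plus the number of distinct
    system elements (capped at MAX_ELEMENTS).
    """
    quality = 0.0
    for relation_type, correctness in statements:
        points = RELATION_POINTS[relation_type]
        if correctness == "correct":
            quality += points
        elif correctness == "partial":
            quality += points * PARTIAL_CREDIT
        elif correctness != "incorrect":
            raise ValueError(f"unknown correctness label: {correctness}")
        # Clearly incorrect statements contribute zero points.
    element_count = min(len(set(elements)), MAX_ELEMENTS)
    return quality + element_count
```

Under these assumed weights, a map with one correct causal statement, one partially correct descriptive statement, one incorrect hierarchical statement, and two distinct elements would score 3 + 0.5 + 0 + 2 = 5.5.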

Audio Recordings and Interviews

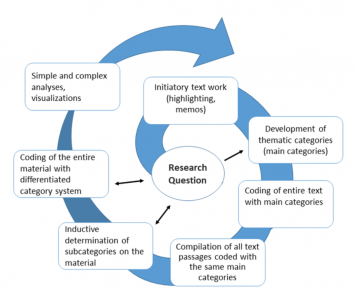

All interviews and audio recordings in class were transcribed using the 2018 to 2020 versions of the MAXQDA software. In order to be able to trace conceptual developments from moment to moment, all transcripts were analyzed employing a content-structuring content analysis according to Kuckartz (2018). Figure 6a shows the process of this type of content analysis, which comprises seven phases, from initiating text work through simple to more complex analyses of the resulting data material. Characteristic of this procedure are feedbacks and loops, i.e., the development of categories takes place in several top-down and bottom-up steps.

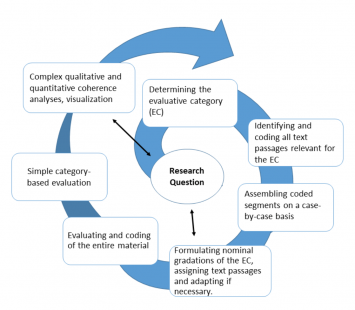

However, research question 1 required not only the identification of topics and argumentation strategies but also an evaluative classification of content; after all, the aim was to depict the development of the students’ system understanding, which implies a gradual unfolding of understanding and skills. For this purpose, another method after Kuckartz (ibid.) was used, the evaluative content analysis (Figure 6b). Categories were formed on the data material whose characteristics were now ordinal and no longer purely thematic (ibid., p. 123ff.).

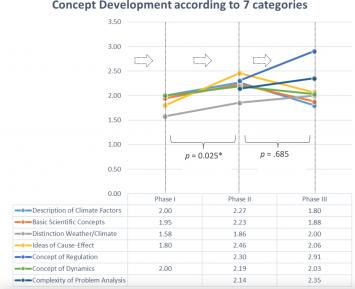

A comprehensive category guide was developed for all three research questions (Appendix 2). On the one hand, the categories were derived deductively from the research questions and the underlying theories of systems competence; on the other hand, they were developed inductively from the material and finally applied to all transcripts. This approach resulted in a total of seven thematic categories of climate-specific system understanding, namely “Description of climate factors”, “Basic scientific concepts”, “Weather/climate distinction”, “Idea of cause-effect”, “Concept of regulation”, “Concept of dynamics”, and “Complexity of Problem Analysis”. All relevant student statements on these seven subject areas were then additionally evaluated using evaluative, four-level codes according to their subject-related meaningfulness. These are referred to as Performance Levels 0, 1, 2, and 3 below (see Figure 7 and Appendix 2).

For the coding process, a second coder was thoroughly familiarized with the research questions, theoretical constructs, and category meanings. In doing so, a procedural approach was taken, which is quite common in qualitative content analyses and which seeks to minimize non-conformities through discussion. The goal was not to present a quantitative inter-coder agreement, but rather to find consensus in clarifying discussions (ibid., p. 105). For this purpose, the entire system of categories belonging to a particular research question was alternately tested jointly and in parallel on part of the material, before being discussed and then discursively developed further. Only then was the entire body of material completely coded again.

Figure 7: Seven thematic categories of climate-specific system understanding and their four performance levels

Results

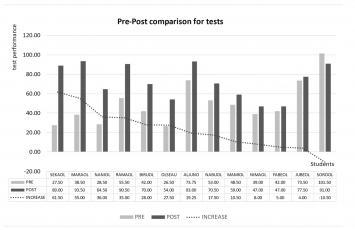

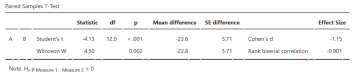

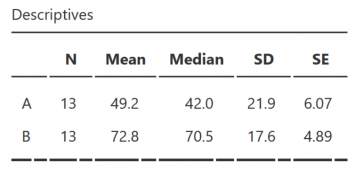

The graph (Figure 8) ranks the test results by performance gain (dark grey dotted line). A Wilcoxon signed-rank test (Figures 9a and b) indicates that the results of the pre-test (Median = 42.0; SD = 21.9) and post-test (Median = 70.5; SD = 17.6) differed significantly and with a strong effect (Wilcoxon test: p = 0.002, r = -0.901). Thus, three months into the sequence, the group was able to reconstruct the organization and dynamics of the climate system much better than at the beginning.

For an evaluative analysis, the entire development process was now broken down into three phases I, II, and III/IV. Each of these time segments roughly corresponds to one Competence Area (CA) of the SYSDENE system competence model. In Appendix 6, CA I “Describe system model” is highlighted in light blue, CA II “Grasp dynamics” in medium blue, and CA III/IV “Make predictions and evaluate action plans” in dark blue. For each of the three phases, all data from the relevant audio recordings and student interviews were included. Direct comparisons between the three phases are limited because not all categories appeared with comparable frequency during all phases (see Appendix 6). Therefore, a quality quotient was calculated for each competence category per phase, which considered relations rather than absolute numbers. For this purpose, the number of student statements per category at a given level was multiplied by a performance-related factor of 0, 1, 2, or 3:

- Statements at Performance Level 0 (PL0), where a learner could not give any explanation at all, were not scored in the numerator.

- Statements belonging to level 1 (PL1) were scored once.

- Statements belonging to level 2 (PL2) were scored twice.

- Statements belonging to level 3 (PL3) were scored three times.

This resulted in the following formula:

.![]()
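A calculation of this kind can be sketched in Python. The text specifies only the numerator weights (0, 1, 2, and 3 for PL0 to PL3); the sketch assumes that the denominator is the total number of coded statements in the category and phase, including PL0 statements, i.e. Q = (1·n₁ + 2·n₂ + 3·n₃) / (n₀ + n₁ + n₂ + n₃). That denominator and the function name are assumptions made for illustration.

```python
def quality_quotient(counts):
    """Weighted quality quotient for one category in one phase.

    counts: mapping from performance level (0, 1, 2, 3) to the number of
        coded student statements at that level.
    Returns the weighted statement count divided by the total number of
    statements (assumed denominator), or None if nothing was coded.
    """
    total = sum(counts.get(level, 0) for level in range(4))
    if total == 0:
        return None  # category did not occur in this phase
    weighted = sum(level * counts.get(level, 0) for level in range(4))
    return weighted / total
```

Under this assumption, the quotient ranges from 0 (all statements at PL0) to 3 (all statements at PL3), which makes phases with different numbers of statements comparable.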

The line diagram (Figure 10) illustrates that two categories of system competence, “Description of climate factors” and “Basic scientific concepts”, developed noticeably in phase II compared to phase I, but decreased in their subject-matter quality during phase III. The categories “Ideas of cause-effect” and “Concept of dynamics” also increased in quality during phase II and declined later but remained above the initial value. Only “Weather/climate distinction” showed a steady increase over all three phases, while “Concept of regulation” and “Complexity of problem analysis” showed a strong increase between phases II and III (these categories did not yet play a role in phase I). A Wilcoxon test showed that when taking all categories into account, the total increase between phases I and II is statistically significant (p = 0.025) but not the total drop between phases II and III (p = 0.685). Still, the decline is relevant for single categories, namely situations in which the students were supposed to recall climate system elements, explain basic scientific concepts or explain basic cause-effect relations.

Using qualitative content analysis of the conversations and the student interviews as well as the maps and written answers of the tests, the initially positive development of “Description of climate factors”, “Basic scientific concepts”, and “Ideas of cause-effect” can be well understood. The students were able to relate most of the experiments (e.g. condensation) to weather or to climate (reflection/absorption, angle of incidence of the sun, currents) and to recognize the climate factors in the climate zones at the climate discovery center. One main pre-conception that all pupils initially held was that the greenhouse effect damages the atmosphere and that this only happens due to human impact. The students were able to revise both these ideas surprisingly quickly. All ideas expressed by learners in later transcripts had changed from a ‘hole-in-the-atmosphere’ idea to a ‘cage’ idea, i.e. that an increasingly dense atmosphere no longer allows warmth to escape, which is much closer to the scientific idea (see first student quote below). By the end of the sequence, the majority of students also distinguished the natural greenhouse effect from the anthropogenic one. Although this was cognitively quite demanding, they were also able to reconstruct the connection between climate and the angle of incidence of the sun (quote 2), to explain condensation, to explain the formation of ocean currents (quote 3), and to apply concepts like reflection in different contexts, as quote 4 illustrates.

- S1_m: […] Earlier the sun passed through the atmosphere, then it hit the earth, then it either went back into space or it hit the earth again from the atmosphere, but now the sun’s rays often don’t leave the atmosphere, and so the earth warms up. (291118_Interview S2_w + S1_m, position 73)

- I: What about the sun in the polar region? S2_m: The sun has a longer way through the atmosphere […] and it heats up a larger area than at the equator. (291118_Interview S1_m + S2_m, position 84)

- S2_w: (…) the blue water was at the bottom because it was cold. And the red one was warm, and that’s why it was on top. […] They mixed and … um, I think that is how sea currents develop. (140319_PostInterview_S2_w and S5_m, position 231–234)

- I: What would happen if there was no more ice on earth? S4_m: Then the earth would heat up more and more, because the white ice can reflect very well. (200319_PostInterview_S4_m, position 92–94)

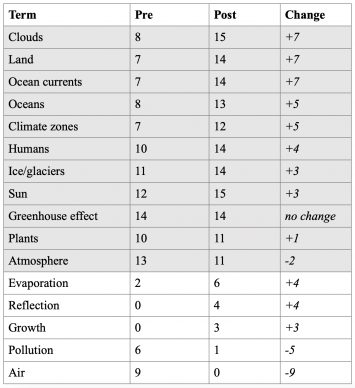

Looking at the concept maps, one sees this positive development confirmed. In their post-maps, considerably more students successfully used critical climate components that are often not part of pupils’ pre-conceptions of climate, such as clouds, land, and ocean currents (cf. Shepardson et al., 2011). A larger number of students also independently brought the terms “reflection” and “evaporation” into play in their post-maps (Table 3). Additionally, students stopped using the vague term “air” and stopped mentioning “pollution”. This not only underlines a more differentiated scientific view of climate but also a decline in initial pre-concepts, in this case, that general environmental pollution is a cause of climate change.

Table 3 Frequencies for single terms, pre- and post-maps comparison. Pre-given terms = grey, additionally used terms = white

Despite the initially rapid progress in the field of CA I, uncertainties concerning the basic climate elements and the application of basic scientific concepts reappeared at a later stage. For instance, when asked to repeat the basic climate components for the Monash climate model two months into the sequence, all five students addressed were able to recall only single elements. As for the greenhouse effect, although the pupils had quickly developed a plausible concept, the question as to how the disappearance of the atmosphere would impact the Earth’s climate proved overwhelming in the student interviews, as the following example illustrates.

I: […] What do you think would happen to the temperatures if we had no atmosphere? // S5_m: Yes hot!! Sahara times ten! // I: If there was no atmosphere? // S2_w: Yes, it would be warm and what else…. uh. // I: Why would it be warmer? // S2_w: Because uh the sun comes in better, I mean, the sun’s rays. And then it all gets warmer. (140319_PostInterview_S2_w+S5_m, pos. 100-105)

Furthermore, in spite of developing a coherent concept for the formation of ocean currents (and despite the phenomenon of wind being explicitly explained several times as an analogy to water currents), some students described trees as the cause of wind by the sequence’s end:

I: What causes wind, S2_w?

S2_w: Eh… oh dear, trees? (140319_PostInterview_S2_w + S5_m, pos. 226)

Competence Area II (system dynamics) was characterized by great heterogeneity right from the beginning. This relates both to the decoding and application of the hands-on models (some laws, such as the chain reaction, could be derived by the students much more easily than others) and to the learners themselves: some succeeded in reconstructing and applying even difficult models, while others did not. For example, four students associated the tipping point model (see Appendix 3, marble run) with an earthquake or a change in the Earth’s orbit rather than with an abstract metastable state. Five other students, however, actually saw irreversible changes depicted in the model, and three students explicitly mentioned the term tipping point and specified e.g. “The Earth can compensate for small changes, but not for changes that are too big” (JUBEOL). A similar picture emerges for the concept maps. Although the dynamics of the climate were taken into account somewhat more in the post-maps (measured by an increased number of feedback descriptions), this only applied to 45% of the students, and the other maps still did not show any dynamic ideas of climate.

As for test question 4, three students interestingly addressed overlaps between the analogue models (see Appendix 3). SOROOL and RAMAOL recognized tipping points in both the domino row and the metastable sink and concluded that in both cases irreversible situations occur. And NABUOL realized that both the coupled pendulums and the metastable sink illustrate a compensation. These students clearly showed that they had abstracted from the concrete models.

When looking at CA IV (resp. the qualitative category “Complexity of problem analysis”) it became evident that the students generally argued in rather complex ways when it came to system-friendly behavior and technological approaches to climate change. However, even though the students questioned things and weighed up pros and cons, their argumentative constructs were sometimes conceptually imprecise or scientifically flawed. For example, one boy argued that electric cars are not better than diesel-powered vehicles in terms of their carbon footprint because their batteries contain radioactive acids (Post-Interview S21_m+S23_m_210918, position 172).

Discussion

By examining the data material, seven meaningful variables of climate system competence development could be generated. Not all of them have so far been specified in the SYSDENE model. The increased number of variables in relation to the SYSDENE model results mainly from the fact that, at first, many technical basics had to be acquired to build a basis for understanding and modelling the climate as a system. Here, the extraordinary importance of CA I for the development of climate system competence became apparent. Being able to describe the main climate system factors is evidently not sufficient, as students also need to understand basic scientific concepts related to the climate (greenhouse effect, water cycle, evaporation, reflection, absorption, photosynthesis, etc.). What is more, they must be able to differentiate between weather and climate and must have a basic understanding of the role of statistics and models for climate research. We can conclude that a model of general system competence cannot be applied 1:1 to the specific system of climate. The need for specific expertise on the system in question has already been noted in the literature (Mehren et al., 2018, p. 686; Feigenspan & Rayder, 2017, p. 146).

Regarding the SYSDENE model, CA I should take even more space in the model because according to Frischknecht et al. (2008) this competence area already involves the notion of cause-effect relationships. In the data analysis of this work, the CA I category “Ideas of cause-effect” clearly intersects with the CA II category “Concept of dynamics”, since complex and rudimentary dynamic cause-effect relationships such as effect chains, indirect effects, and temporally and spatially shifted effects already appear here.

What is more, as far as CA II is concerned, it seemed to make sense for the analysis of the text material to distinguish between the aspects of dynamics and regulation, because a real understanding of systems cannot only be derived from a knowledge of cycles, feedbacks and non-linear effects (= variable “Concept of Dynamics”), but also from an understanding of the natural laws that govern these processes, namely, that complex natural systems independently strive for stability (= variable “Concept of regulation”).

The fact that the students were generally capable of complex argumentation with regard to climate change mitigation was evident in the relatively strong expression of CA III/IV. This may result from the mainly socio-political perspective under which the students had already dealt with the topic of climate at school prior to the sequence. However, it is precisely in the intertwining of CA I and CA IV that the danger lies, as some of the pupils’ arguments were conceptually imprecise or scientifically flawed despite appearing rather complex. Such imprecise basic understandings may well be stumbling blocks in the planning of adequate adaptation and mitigation strategies (Shepardson et al., 2011). In addition, they could lead students to simply recite normative ideas instead of independently deriving such arguments from a solid base of knowledge, which may lead to a decreased feeling of personal relevance and action intention (Gorr, 2014).

Precisely because inconsistencies in fundamental knowledge occur in the later stages, while other domains have already developed, these results speak less for a stage model of System Competence and more for a model of permanently interlocking fields of competence, as already indicated by arrows in the SYSDENE model.

Implications for Cognitive Development Theories

Cognitive and emotional processing and linking do not necessarily mean that the linking is didactically intended or scientifically correct. This becomes visible in the qualitative results, according to which meaningful connections seemingly only prevailed after a longer phase of increasing disorganization, an observation supporting Hopf and Wilhelm (2018). Leaps in the students’ argumentations suggested that ad hoc explanations do not necessarily have to be synonymous with internalized and abstracted conceptions. However, they were at least partly constructed spontaneously and contextually. It can thus be assumed that the development of student models towards scientifically accepted conceptions, especially when complex systems are involved, takes a long time, which reinforces the findings of diSessa (2018).

However, in the concept maps, a progressive systematization and reorganization of the original knowledge system could be traced in the sense of a conceptual development. For some students, changes in the priority of concepts, new relationships between concepts, and a replacement of old concepts are observable, which in the sense of the KiP theory already indicates an advanced concept development (ibid.). For other pupils only the first stages of conceptual development are visible: new elements are added, conceptual priorities change, certain concepts are no longer mentioned, and concepts are partly differentiated by naming precise examples. What is lacking, however, is the establishment of new relationships between terms, a general condensation of the conceptual network and a more complex view of mutual influences, in essence a systemic view. This supports earlier quantitative studies finding that only a certain percentage of students adopted a truly systemic understanding (Sommer, 2005; Bell, 2004; Steinberg, 2001; Klieme & Maichle, 1991).

Conclusions for theory and teaching – and limitations

A learner with a non-scientific concept will nevertheless experience it as coherent in their everyday life (Amin, Smith & Wiser, 2014). The reason is that she/he will quickly activate p-prims in changing contexts that appear to plausibly explain a phenomenon (diSessa, 2018). It can therefore be assumed that the more small, detached elements are consolidated, the more difficult it becomes for pupils to develop a stable, differentiated and domain-specific knowledge system as they grow older. Thus, it makes particular sense to implement a learning sequence, such as the one presented here, in grade 7 or 8, when fragments and ideas have not yet become consolidated. As the data suggest, it is not just a matter of explaining to students the interplay of individual climate factors; learners also need to be able to access basic scientific concepts such as reflection, absorption, greenhouse effect, evaporation and the water cycle, and to distinguish between climate and weather at this point in their school careers. If these concepts are introduced earlier, learners can draw on them at this point, which helps decrease cognitive load.

However, this alone may not suffice. Structural concepts and explanatory schemes also need to be refreshed constantly in the course of a student’s school career. As Bell (2004) states, “abstract schemata are buried again after some time […] and […] the students’ competence in applying them fades” (ibid., p. 202 f.). For instance, in order to explain complex processes in the climate as a specific system, it may help if students are already somewhat familiar with general systems thinking at this point. Useful ideas in this regard come from Bollmann-Zuberbühler et al. (2010), who developed appropriate system thinking teaching materials for grades 1-9. To support the move from an exclusively linear way of thinking to a more systemic one, it could also help if teachers employed concept maps instead of mind maps in their lessons as soon as they introduce new topics. Considering network-like interrelationships instead of collecting loose factors could certainly be done across disciplines and foster systemic thinking in students early on.

As for cognitive change, it became clear that although some basic concepts, such as that of the greenhouse effect, could be changed quickly and sustainably by the students, this was no guarantee for a successful application of these concepts or for analogy building to similar concepts. In the situations described above, the children’s everyday experiences repeatedly seemed to get in the way of the successful application of newly developed cognitive concepts (Amin, Smith & Wiser, 2014). Thus, the students did not generally use p-prims productively until the end of the sequence. This raises the question of what qualities characterize those learners who develop a stronger understanding of systems and what distinguishes them from learners who develop less. This will be examined in more detail in a separate paper.

Concerning the methods for testing systems thinking, it can be deduced that mere pre-/post-tests (Brockmüller, 2019; Bräutigam, 2014; Mischo and Rieß, 2008; Hlawatsch et al., 2005) assess general system understanding at a certain point in time on the basis of already tested task types. However, they cannot assess how fragmented, domain-specific knowledge integrates into coherent concepts and whether these concepts can be fruitfully and consistently applied in changing situations. This study has begun to address this gap by collecting and analyzing qualitative data. Thus, this work can contribute to a more comprehensive understanding of the learning processes that are characteristic of systems competence development in relation to the climate.

The qualitative content analysis of the students’ statements proved to be indispensable for understanding how individual categories of system competence developed over time. Through this multi-method analysis, the importance of time as a factor in systems competence development became strongly evident. Looking at the overall post-test results, which were only collected one week after the sequence’s end, it seems that the temporal expansion had a consolidating effect on the learners’ concept development when considering Competence Areas I and II. Admittedly though, the test results can only be compared to a limited extent with the statements made by the students in the audio recordings. While in the concept maps the students were asked to assemble given elements in a meaningful way, in the class discussion the students were mostly asked rather openly (“Which elements make up our climate?”). In this respect, it is not entirely clear whether the excellent test results are really due to a consolidation effect or rather caused by a different questioning approach. A completely open question (instruction) also tests memory, whereas the concept maps merely test understanding.

Furthermore, this work has not captured possibly delayed cognitive developments. It would be particularly interesting to see whether and how students interpret future school learning content and everyday experiences based on what they have gained during this teaching-learning sequence. How would they integrate new knowledge pieces and experiences in their extended, or even changed, climate system concepts?

A last word to teachers

In 2022, the University of Oldenburg’s working group on Physics Education developed a teachers’ manual for the teaching-learning sequence described here. The handout will guide teachers through the sequence with supporting materials in terms of subject matter and didactics but will also leave room for personal focus and a teacher’s specific subject expertise. Before the handout is available for use, some hands-on models will be developed further, namely those that proved difficult for students to understand in the sequence or that, due to their components or outer appearance, tended to promote unintended ideas in students.

Abbreviations

CA I-IV Competence areas according to the SYSDENE Model of System Competence by Frischknecht-Tobler, Nagel & Seybold (2008)

DBR Design Based Research

KiP Knowledge in Pieces theory as suggested by Andrea diSessa (2018)

PL performance level

p-prims According to diSessa, these are intuitive fragments that are only slightly abstracted from everyday experience (2018)

References

Amin, T.G., Smith, C. & Wiser, M. (2014). Student Conceptions and Conceptual Change: Three Overlapping Phases of Research. In N. Lederman and S. Abell (Eds.) Handbook of Research in Science Education, 2(4), Routledge.

Assaraf, O.B., Orion, N. (2005). Development of System Thinking Skills in the Context of Earth System Education. Journal of Research in Science Teaching, 42(5), 518-560. DOI: 10.1002/TEA.20061.

Bell, T. (2004). Komplexe Systeme und Strukturprinzipien der Selbstregulation im fächerübergreifenden Unterricht. Eine Lernprozessstudie in der Sek. II. Zeitschrift für Didaktik der Naturwissenschaften 10, 163–181.

Baumgartner, E.; Bell, P.; Brophy, S.; Hoadley, C.; Hsi, S. & Joseph, D. (2003). Design-Based Research. An Emerging Paradigm for Educational Inquiry. Educational Researcher, 32(1), 5–8.

Bollmann-Zuberbühler, B. (2010). Systemdenken fördern. Systemtraining und Unterrichtsreihen zum vernetzten Denken 1.-9. Schuljahr. Bern: Schulverlag plus. Available at: http://aleph.unisg.ch/hsgscan/hm00349970.pdf (accessed 9 September 2022).

Bollmann-Zuberbühler, B., Strauss, N.-C.; Kunz, P. & Frischknecht-Tobler, U. (2016). Systemdenken als Schlüsselkompetenz einer Bildung für nachhaltige Entwicklung. Eine explorative Studie zum Transfer in Schule und Unterricht. Beiträge zur Lehrerinnen- und Lehrerbildung, 34 (3).

Bräutigam, J. I. (2014). Systemisches Denken im Kontext einer Bildung für nachhaltige Entwicklung. Konstruktion und Validierung eines Messinstruments zur Evaluation einer Unterrichtseinheit. Dissertation. Available at: https://d-nb.info/1127149997/34 (accessed 17 October 2021).

Brockmüller, S. (2019). Erfassung und Entwicklung von Systemkompetenz. Empirische Befunde zu Kompetenzstruktur und Förderbarkeit durch den Einsatz analoger und digitaler Modelle im Kontext raumwirksamer Mensch-Umwelt-Beziehungen. Dissertation, Heidelberg. Available at https://opus.ph-heidelberg.de/frontdoor/index/index/docId/340 (accessed 10 January 2022).

Calmbach, M., Borgstedt, S., Borchard, I., Thomas, P.M., Flaig, B.B. (2016). Wie ticken Jugendliche 2016? Lebenswelten von Jugendlichen im Alter von 14 bis 17 Jahren in Deutschland. Springer Fachmedien. Available at: https://link.springer.com/book/10.1007/978-3-658-12533-2 (accessed 9 January 2022).

Campbell, M.E. (2012). Modelling the co-development of strategic and conceptual knowledge during mathematical problem solving. University of California, Berkeley. Available at: https://escholarship.org/uc/item/0g57830j (accessed 20 November 2021).

Clausen, S. (2015). Systemdenken in der außerschulischen Umweltbildung. Eine Feldstudie. Münster: Waxmann.

Clausen, S; Christian, A. (2012): Concept Mapping als Messverfahren für den außerschulischen Bereich. Journal für Didaktik der Biowissenschaften 3, 18-31.

diSessa, A.A. (2018). A friendly introduction to “Knowledge in Pieces”. Modeling types of knowledge and their roles in learning. In G. Kaiser, H. Forgasz, M. Graven, A. Kuzniak, E. Simmt & B. Xu (Eds.), Invited Lectures from the 13th International Congress on Mathematical Education (pp. 65–84). Cham: Springer International Publishing (ICME-13 Monographs). DOI: 10.1007/978-3-319-72170-5.

Döring, N. & Bortz, J. & Poeschl-Guenther, S. (2016). Forschungsmethoden und Evaluation in den Sozial- und Humanwissenschaften.

Feigenspan, K. & Rayder, S. (2017). Systeme und systemisches Denken in der Biologie und im Biologieunterricht. In H. Arndt (Ed.), Systemisches Denken im Fachunterricht. FAU Lehren und Lernen, FAU University Press (pp. 139-176). Available at: https://opus4.kobv.de/opus4-fau/frontdoor/index/index/docId/8609 (accessed 9 January 2022).

Frischknecht-Tobler, U., Nagel, U., Seybold, H. (Eds.) (2008). Systemdenken. Wie Kinder und Jugendliche komplexe Systeme verstehen lernen. Pestalozzianum. ISBN: 3-03755-092-9.

Gorr, C. (2014). Changing Climate, Changing Attitude? Museums & Social Issues 9(2), 94–108.

Haugwitz, M., Sandmann, A. (2009). Kooperatives Concept Mapping in Biologie. Effekte auf den Wissenserwerb und die Behaltensleistung. Zeitschrift für Didaktik der Naturwissenschaften 15.

Hlawatsch, S., Lücken, M., Hansen, K.-H., Fischer, M. & Bayrhuber, H. (2005). Forschungsbericht: System Erde. Schlussbericht. Kiel: Leibniz-Institut für die Pädagogik der Naturwissenschaften (IPN).

Hopf, M., Wilhelm, T. (2018): Conceptual Change – Entwicklung physikalischer Vorstellungen. In H. Schecker, T. Wilhelm, M. Hopf & R. Duit (Eds.), Schülervorstellungen und Physikunterricht. Ein Lehrbuch für Studium, Referendariat und Unterrichtspraxis (24–37). Springer Spektrum. Available at: https://link.springer.com/content/pdf/10.1007%2F978-3-662-57270-2.pdf (accessed 9 January 2022).

Mehren, R., Rempfler, A., Buchholz, J., Hartig, J., & Ulrich-Riedhammer, E. (2018). System competence modelling: Theoretical foundation and empirical validation of a model involving natural, social, and human-environment systems. Journal of Research in Science Teaching, 55(5), 685-711.

Klieme, E., Maichle, U. (1994). Modellbildung und Simulation im Unterricht der Sekundarstufe I: Auswertung von Unterrichtsversuchen mit dem Modellbildungssystem MODUS: Institut für Bildungsforschung. Available at: https://books.google.de/books?id=mu0bmwEACAAJ (accessed 12 May 2022).

Kuckartz, U. (2018). Qualitative Inhaltsanalyse. Methoden, Praxis, Computerunterstützung. Beltz Juventa. ISBN: 978-3-7799-6231-1.

Mischo, C. & Rieß, W. (2008). Förderung systemischen Denkens im Bereich von Ökologie und Nachhaltigkeit. Unterrichtswissenschaft 36 (4), 346–364.

Ossimitz, G. (2000). Entwicklung systemischen Denkens. Theoretische Konzepte und empirische Untersuchungen. Profil-Verlag.

Richter, C. and Komorek, M. (2019, February). Rückwärtsplanung für den Unterricht. Paper presented at the GDCP Conference Vienna, Austria. Available at: https://www.gdcp-ev.de/wp-content/tb2022/TB2022_196_Richter.pdf (accessed 9 September 2022)

Rieß, W., Schuler, S. & Hörsch, C. (2015). Wie lässt sich systemisches Denken vermitteln und fördern? Theoretische Grundlagen und praktische Umsetzung am Beispiel eines Seminars für Lehramtsstudierende. Geographie aktuell und Schule 37 (215), 16–29.

Shepardson, D.P., Roychoudhury, A., & Hirsch, A.S. (Eds.). (2017). Teaching and learning about climate change: A framework for educators. Routledge. https://doi.org/10.4324/9781315629841.

Shepardson, D.P., Niyogi, D., Roychoudhury, A., Hirsch, A. (2011). Conceptualizing climate change in the context of a climate system. Implications for climate and environmental education. Environmental Education Research 18 (3), 323–352. DOI: 10.1080/13504622.2011.622839.

Siebert, H. (2007). Vernetztes Lernen. Systemisch-konstruktivistische Methoden der Bildungsarbeit. Augsburg: ZIEL.

Sommer, C. (2006): Untersuchung der Systemkompetenz von Grundschülern im Bereich Biologie. Dissertation. Christian-Albrechts-Universität zu Kiel. Available at: https://macau.uni-kiel.de/receive/diss_mods_00001652 (accessed 9 January 2022).

Stanat, P. (2018). 15 Jahre kompetenzorientierte Bildungsstandards – eine kritische Reflexion. In B. Jungkamp & M. John-Ohnesorg (Eds.): Können ohne Wissen? Bildungsstandards und Kompetenzorientierung in der Praxis (pp. 15–26). Berlin: Friedrich Ebert Stiftung (Schriftenreihe des Netzwerk Bildung 44).

Steinberg, S. (2001). Die Bedeutung graphischer Repräsentationen für den Umgang mit einem komplexen dynamischen Problem. Eine Trainingsstudie. Diplomarbeit, Technische Universität, Berlin.

Sweeney, L. B. & Sterman, J. D. (2007). Thinking about systems. Student and teacher conceptions of natural and social systems. System Dynamics Review 23 (2-3), 285–311.

Tschekan, K. (2009): Komplexe Lernsituationen im Unterricht. Available at: https://docplayer.org/20949916-Komplexe-lernsituationen-im-unterricht.html (accessed 12 September 2022).

Umwelt im Unterricht (2015): Das Thema Klimawandel in der Schule. Available at: www.umwelt-im-unterricht.de/hintergrund/das-thema-klimawandel-in-der-schule/ (accessed 12 July 2022).

Vollmer G. (1987). Wissenschaft mit Steinzeitgehirnen? In H. v. Ditfurth (Ed.) Mannheimer Forum 1986/87, 8-61.

Wilhelm, T. & Schecker, H. (2018). Strategien für den Umgang mit Schülervorstellungen. In: H. Schecker, T. Wilhelm, M. Hopf & R. Duit (Eds.): Schülervorstellungen und Physikunterricht. Ein Lehrbuch für Studium, Referendariat und Unterrichtspraxis. Springer Spektrum, 38–61.

After completing an MSc in Learning and Visitor Studies in Museums and Galleries at the University of Leicester, UK (Dept. of Museum Studies), Claudia obtained a PhD in the doctoral program "STEM learning in non-formal settings" at the Carl-von-Ossietzky University Oldenburg, Germany. Today, she is a member of the Visitor Research and Evaluation team at the experimenta Science Center in Heilbronn, Germany.
