Everett JSE Dec 2018 General Issue PDF

Everett Annotated NJARNG Energy Audit Template – Dec 2018

Abstract: This paper presents best practices for client-oriented project-based learning (PBL) based on building audits used to impart practical sustainable engineering skills. Insights were gained over years of natural reflective practice during which students conducted off-campus building audits for the New Jersey Department of Military and Veterans Affairs. Few studies have explored the use of off-campus building audits with real clients in project-based learning courses. A survey of students from recent semesters, together with tangible outcomes, is used to evaluate success. The building audits are valued by both students and clients. Best practices contributing to the success of the building audit course are described; they can be used by any PBL practitioner. Critical best practices pertain to training, deliverables, a report template, a submit-review-resubmit cycle, and regular interaction among undergraduate students, graduate students, faculty, and client staff. In particular, repeated cycles of submitting reports, receiving comments, and resubmitting appear to be vital to both student learning and client satisfaction. On average, student teams submitted their major report 3.75 times and received comments 3.9 times.

Keywords: Building Audits, Energy Audits, Project Based Learning, Clinics, Clients.

1 Introduction

The Henry M. Rowan College of Engineering at Rowan University (Glassboro, New Jersey, US) has an innovative curriculum that includes Engineering Clinic, a sequence of eight hands-on courses taken by all engineering students, one each semester (Chandrupatla et al. 1996, Everett et al. 2004). All Engineering Clinics involve project-based learning (PBL). Freshman Engineering Clinic is focused on engineering measurements and competitive assessment. Sophomore Engineering Clinic is focused on engineering design and has significant communication components. In Junior and Senior Engineering Clinic (JSEC), students work in small teams on open-ended projects under the supervision of one or more professors, often for external clients.

The building audits described in this paper are conducted as JSECs. Building audits are an important tool to achieve sustainability. They are used to identify resource conservation opportunities related to energy, water, and materials. One of the UN sustainable development goals approved in 2015 is “ensure access to affordable, reliable, sustainable and modern energy for all” (UN 2017). Buildings consume between 20 and 40 % of energy in developed countries (EPA 2009, Pérez-Lombard et al. 2008). Reducing building energy consumption improves sustainability. Other UN sustainable development goals concern water and sustainable consumption. Buildings consume significant amounts of water and materials (EPA 2009). In an educational setting, building audits can be used to impart practical sustainable engineering skills, including energy and water data collection and analysis, energy modelling, environmental impact assessment, economic analysis, report writing, and presentation making.

The purpose of this paper is to describe best practices for client-oriented PBL based on work with numerous student teams completing building audits for the New Jersey Department of Military and Veterans Affairs (NJ DMAVA). The objectives are:

- Describe the Engineering Clinic sequence;

- Review literature related to building audits and client-oriented PBL;

- Present the results of three years of reflective practice conducting building audit clinics; and

- Identify best practices for client-oriented PBL.

2 Methods

Building audits have been used in client-oriented PBL at Rowan University for over ten years. A team of faculty, graduate students, and client staff has supervised multiple building audits every semester since Fall 2012 for a single client, NJ DMAVA. Though the main focus is on energy consumption, the term ‘building audit’ is used here because the audits can also evaluate water consumption and building maintenance.

The literature was searched for studies on building and energy audits and client-oriented PBL in college and university settings. Engineering, science, and education databases were searched for English language articles from 2000 to the present.

The cumulative improvement achieved by years of natural reflective practice is described and discussed in this paper, identifying best practices for practitioners interested in client-oriented PBL in general and building audits specifically. Reflective practice has been described as “…the capacity to reflect on action so as to engage in a process of continuous learning” (Schon 1983). The term “natural reflective practice” is used here because the reflective practice was not based on reflective practice literature (Castelli, 2016; Loughran, 2002; Schon, 1983) but rather arose naturally from the desire of faculty, graduate students, and client staff to use reflection to incrementally improve the building audit clinics.

Three years of natural reflective practice resulted in a stable course format in Fall 2015. To evaluate the effect of the natural reflective practice, the twenty-seven students who completed building audit clinics during Fall 2015, Spring 2016, and Fall 2016 were asked to complete an online questionnaire over winter break 2016-2017. The questionnaire was administered using SurveyMonkey (www.surveymonkey.com). Twenty-one students completed the questionnaire, a 78 % return rate. Questions used Likert or short answer responses. The success of the building audit clinics is analyzed using the percentages of students providing specific answers (Likert) or categories of answers (short answer).
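The percentages reported in Section 4.2 follow directly from the response counts. A minimal Python sketch of that tally is given below, assuming responses are stored as plain category labels; the function names, and the placement of ‘did not help or hinder’ just below ‘fair’ on the ordered scale, are illustrative assumptions rather than part of the SurveyMonkey export. The example uses the preparation counts reported in Section 4.2 (three excellent, nine very good, five good, three fair, one ‘did not help or hinder’).

```python
from collections import Counter

# Ordered Likert scale, best to worst. Placing the neutral answer just below
# "fair" is an assumption so that "fair or better" excludes it.
SCALE = ["excellent", "very good", "good", "fair",
         "did not help or hinder", "poor", "very poor"]


def response_rate(completed: int, invited: int) -> int:
    """Questionnaire return rate, rounded to a whole percent."""
    return round(100 * completed / invited)


def percentages(responses: list[str], scale: list[str]) -> dict[str, int]:
    """Percent of respondents choosing each category (0 for unused categories)."""
    counts = Counter(responses)
    n = len(responses)
    return {cat: round(100 * counts[cat] / n) for cat in scale}


def percent_at_or_above(responses: list[str], scale: list[str], threshold: str) -> int:
    """Percent of respondents whose answer is `threshold` or better."""
    cutoff = scale.index(threshold)
    n_ok = sum(1 for r in responses if scale.index(r) <= cutoff)
    return round(100 * n_ok / len(responses))


# Preparation-for-JSEC responses from the student questionnaire (21 respondents).
prep = (["excellent"] * 3 + ["very good"] * 9 + ["good"] * 5
        + ["fair"] * 3 + ["did not help or hinder"] * 1)

print(response_rate(21, 27))                      # 78
print(percent_at_or_above(prep, SCALE, "fair"))   # 95
print(percentages(prep, SCALE))
```

Because each category is rounded independently, reported percentages need not sum to exactly 100 %, as with the 24/48/29 % split for future audit ability.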

3 Background

This section includes a description of the Engineering Clinic sequence and a review of building/energy audit and client-oriented PBL literature. The Engineering Clinic description is used to provide context for the building audit clinics.

3.1 Rowan University Engineering Clinics

Rowan Engineering graduates complete eight Engineering Clinics, one per semester. All of the clinics use project-based learning (PBL). Laboratory, field, or design projects, and resulting written reports and presentations, provide opportunities for students to learn experientially. The objectives of the Engineering Clinic sequence are to (Everett et al. 2004):

- Create multidisciplinary experiences through collaborative laboratories and coursework;

- Incorporate state-of-the-art technologies throughout the curricula;

- Create continuous opportunities for technical writing and communication; and

- Emphasize hands-on, open-ended problem solving, including undergraduate research.

Freshman Engineering Clinic is focused on engineering measurements (Fall) and competitive assessment (Spring). Students from six engineering disciplines are mixed in sections of approximately twenty-five students. Fall lectures and laboratories teach academic survival skills and first-semester engineering topics, e.g., note taking, cooperative learning, learning styles, significant figures, measurement uncertainty, statistics, report writing, and problem solving. In the Spring semester students work on a semester-long competitive assessment project, the systematic testing of existing products or processes for the purpose of comparison and improvement.

Sophomore Engineering Clinic (SEC) is focused on communication and engineering design (Fall and Spring). For perhaps the first time, students are exposed to realistic design problems best solved by multidisciplinary engineering teams. SEC has significant communication components, both writing and speaking (Riddell et al. 2008, Dahm et al. 2009, Riddell et al. 2010). It is team-taught with communications faculty. Students write about and give oral presentations on the engineering projects they complete during laboratory sessions. Working together, communications and engineering faculty assess student work. SEC fulfills two general education requirements for graduation from Rowan University: College Composition II and Public Speaking.

The hands-on, teamwork-oriented, communications focus of the first two years of Engineering Clinic prepares students for Junior and Senior Engineering Clinic (JSEC). In JSEC, students work in small teams on open-ended projects under the supervision of one or more professors. Professors in the College of Engineering are expected to work with at least two teams of three or four students each semester. This counts as one course of a professor’s teaching load.

Each JSEC team works on a unique project, which can be multiple semesters in length. A typical sequence includes: information search and review; development of a clear and concise goal or problem statement; planning; research, design, prototyping, and/or testing activities; and presentation of results. Most projects are funded by industry or governmental agencies. Some projects fund graduate students, postdoctoral researchers, or even full-time staff who work with student teams. Over 120 projects run in a typical semester. Many are interdisciplinary.

3.2 Project Based Learning Literature – Audits and Clients

A limited number of studies have focused on student building audits in an academic setting. Most do not involve real clients. Off-campus audits are rare. Wierzba et al. (2011) describe a program that involves off-campus audits. The non-profit Colorado College Energy Audit and Retrofit Program teaches students the science and mechanics of energy audits while helping residents in older low-income neighborhoods identify ways to conserve energy and lower utility bills. The article is focused on using audits of a representative sample of homes to estimate neighborhood information and obtain funding, e.g., for neighborhood renewal grant proposals. The audit process includes a blower door test, evaluation of the building envelope, and physical measurement of the home. The Energy-10 model (DOE 1999) is used to model energy consumption and identify recommended actions. The article gives little information on how to supervise student-led building audits.

Szolosi (2014) taught eco-efficient decision-making by having students conduct lighting audits in their residences. It is not clear whether the residences were on or off campus, or both. He found the exercise led students to include an energy audit in a later needs assessment of a recreational facility. Students also gained insight into their own energy consumption and the paybacks associated with energy-efficient light bulbs.

A number of studies were limited to on-campus audits. Leicht et al. (2015) had students in an architectural engineering course visit two on-campus buildings and assess the feasibility of a future renovation project. Student interest in energy audits increased significantly. Exam results showed students learned directly from the site visits, i.e., they acquired information not covered in class. The authors recommend the use of real-world scenarios that “…allow students to better understand what engineers in industry actually do and to see course principles being enacted in practice”.

Architecture students at the Technical University of Catalonia working on a final project were provided with sufficient data to complete energy audits on existing university buildings (Cantalapiedra et al. 2006). The students analyzed construction drawings, building characteristics, energy consumption, natural lighting, lighting controls, water consumption, and HVAC systems. Students worked individually, but in a cooperative environment. Each student completed a final thesis based on an energy audit. The authors conclude that university energy audits provide an opportunity for students to apply knowledge learned in class to real life. A survey of the students indicated their knowledge of and aptitude towards environmental issues changed from “not sufficient” before the project to “satisfactory” after completion. Finally, the authors state that energy audits can help students understand the connection between their future profession and sustainability.

Wu and Luo (2016) used PBL to teach Building Information Modelling (BIM) in a construction management curriculum. Students participated in an on-campus green building project through roleplay, acting as if they were an engineering team working on the project. While students did not collect data at a field site, they used data from an actual project. Students planned, designed elements related to energy, water, and lighting, and created construction documents. The authors share a number of metrics and tools that can be used to evaluate student work in the PBL environment. Student feedback indicated that PBL and the associated roleplay helped bridge the gap between classroom learning and the real world. The biggest failure identified by students was course scheduling, a result of the open-ended nature of PBL.

Two studies used off-campus data; however, neither included actual site visits for data collection. Park and Park (2012) used an industrial energy audit scenario to develop and assess PBL. Students working in teams completed a virtual pre-audit analysis, walk-through analysis, and recommendations and follow-up. The authors provide a methodology for designing appropriate instructional materials. The method was used by the authors to develop virtual scenarios that capture important aspects of professional practice.

Martínez et al. (2011) studied project-based learning using power supplies and photovoltaic energy. This project did not include field work, i.e., students did not collect data directly. Students worked in teams of four. Students were expected to incorporate feedback provided on intermediate deliverables into final deliverables. Moodle (moodle.org) was used to provide course materials, collect student work, and serve as a platform for collaboratively writing reports. The authors provide a useful rubric for grading projects. The percentage of students passing the associated courses increased significantly once the virtual projects were incorporated.

Various authors have examined the value of PBL with clients. O’Brien and Sarkis (2014) describe sustainability-oriented PBL with a variety of real-world clients, including, but not limited to, small businesses, non-profits, and municipalities. They provide a 14-week schedule for completing client-oriented PBL. They describe the need for multiple reviews of student work to ensure client-acceptability. They also mention that, in the long run, it may be difficult to find new projects and clients in an institution’s surrounding community.

Other client-based PBL studies were not audit related. Kramer-Simpson et al. (2015) determined that client-based PBL helped students acquire skills they can use in real-world practice, i.e., internships and jobs. Balzotti and Rawlins (2016) used a simulated work environment, but real clients, in a technical communication course for engineering students. While students suffered some confusion and discomfort moving from the traditional classroom to a simulated work environment, student feedback indicates an enhanced understanding of workplace communication and motivation to create good products for the client.

The benefits of PBL identified in the studies cited here include learning about real-world engineering, making connections between classwork and the real world, and better understanding of course topics. It is rare for building audit PBL to include real-world clients or on-site data collection, especially off-campus. This makes the work presented here of special interest to educators interested in client-oriented PBL and off-campus data collection.

4 Results

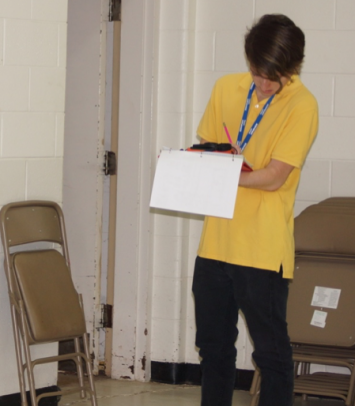

This section begins with a description of the results of natural reflective practice from five years of operation of the Rowan Building Audit Center. During this time, the authors worked with approximately 20 student teams conducting off-site building audits for NJ DMAVA. It ends with the results of the survey of recent students. Photos showing students conducting audits are given throughout this section (Figures 1 – 4).

4.1 Natural Reflective Practice

The New Jersey Department of Military and Veterans Affairs is responsible for over 40 buildings in New Jersey, including armories, training facilities, maintenance facilities, and headquarters. NJ DMAVA is required by the Energy Independence and Security Act of 2007 and DoD Instruction 4170.11 to audit 25 % of its buildings annually (GPO 2007, DoD 2009).

Building audits were first used for pedagogical purposes at Rowan University in SEC in the early 2000s (Riddell et al. 2004). The experience led to JSECs in which farms were audited, funded by the NJ Board of Public Utilities (Gillespie et al. 2008). Ad hoc JSEC building audits for NJ DMAVA and other clients began in 2008. The process was regularized in 2012 by a ten-year agreement with NJ DMAVA creating the Rowan Building Audit Center. The Center audits six to ten NJ DMAVA buildings a year using five JSEC teams and one team of summer interns. Since Fall 2012, approximately 100 undergraduate students have spent at least one semester or summer conducting audits. The building audits are an important part of NJ DMAVA efforts to increase the sustainability of its facilities.

Figure 1: Inventorying all energy and water consuming devices

Each building audit is performed by a team of three to five students, typically composed of Civil and Environmental, Electrical and Computer, and Mechanical Engineering undergraduate majors. Students are expected to work approximately six hours a week in a team environment and two to four hours a week individually. Teams meet twice weekly with a Master’s student whose studies are supported by the Center. Each team is also supervised by one of four professors affiliated with the Building Audit Center. Two or three Center professors are assigned to work with students each semester, one per team. They meet with their team at least once a week.

The Center has audited over 2.3 million square feet of space since Fall 2012 and identified significant savings opportunities. To date, over $1.2 million in potential annual energy savings have been identified through strategies such as LED lighting, natural gas conversions, solar PV systems, HVAC controls, and equipment upgrades. The NJ DMAVA Energy Manager uses the Center’s reports to support requests for facility maintenance and improvement. The Center’s recommendations are being implemented; for example, eight facilities have been completely retrofitted with LED lighting. The program was recently recognized as one of the top five Army community partnerships in the nation (Kimmons 2016). The program also won the 2016 Federal Energy Management Program (FEMP) Award in the Program category (EERE 2017). NJ DMAVA staff actively promote the program to other government agencies, a testament to its success.

Natural reflective practice of the Building Audit Center has produced significant improvements. The goal was to optimize student learning outcomes while improving teams’ major reports. Results included: providing a detailed schedule; improving training materials; providing and improving a report template; requiring students to submit reports multiple times over the semester and heavily commenting on submissions to provide guidance; and taking more advantage of the presence of DMAVA staff during class time. Other changes were prompted by the client, e.g., building audits now include an Army Installation Status Report (ISR), ENERGY STAR Sustainable Buildings Checklist, and ENERGY STAR Portfolio Manager Benchmark (US Army 2012, Energy Star 2014, Energy Star 2016).

Figure 2: Determining whether a fluorescent light fixture has a magnetic or electronic ballast

To complete a high-quality audit in a fifteen-week semester, scheduling is critical. Audits must start as early in the semester as possible. A list of audit sites and contacts is compiled before the semester starts. Students are assigned to audit teams during the first week of the semester, and their course and work schedules are obtained. Periods of three or more hours when team members are available are identified to allow time for travel and data collection. Site visits are then arranged with the local contacts for week three.
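Finding site-visit slots amounts to intersecting the free time of every team member and keeping windows of at least three hours. The sketch below is one hypothetical way to do this for a single day, assuming each student’s availability is recorded as sorted, non-overlapping (start, end) intervals in minutes after midnight; the function and variable names are illustrative, and the course does not prescribe any particular tool.

```python
Interval = tuple[int, int]  # (start, end) in minutes after midnight


def intersect(a: list[Interval], b: list[Interval]) -> list[Interval]:
    """Overlap of two sorted lists of non-overlapping free intervals."""
    i = j = 0
    overlap: list[Interval] = []
    while i < len(a) and j < len(b):
        start = max(a[i][0], b[j][0])
        end = min(a[i][1], b[j][1])
        if start < end:
            overlap.append((start, end))
        # Advance whichever interval finishes first; it cannot overlap anything later.
        if a[i][1] < b[j][1]:
            i += 1
        else:
            j += 1
    return overlap


def common_windows(team_free: list[list[Interval]], min_minutes: int = 180) -> list[Interval]:
    """Windows in which every team member is free for at least `min_minutes`."""
    common = team_free[0]
    for free in team_free[1:]:
        common = intersect(common, free)
    return [(s, e) for s, e in common if e - s >= min_minutes]


def hm(hours: int, minutes: int = 0) -> int:
    """Convert a clock time to minutes after midnight."""
    return 60 * hours + minutes


# Hypothetical free time on one weekday for a three-student team.
alice = [(hm(8), hm(12)), (hm(13), hm(17))]
bob = [(hm(9), hm(16))]
carol = [(hm(8), hm(10)), (hm(12), hm(18))]

print(common_windows([alice, bob, carol]))  # [(780, 960)], i.e. 13:00-16:00
```

The three-hour minimum (`min_minutes=180`) comes from the text; travel to and from the site has to fit inside the window, so a longer threshold may suit distant facilities.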

Building audit training occurs in week two. It starts with the overall process, then focuses on specific audit skills. Students are introduced to the measuring devices, checklists, and data recording methods used during site visits. Training also includes a mock audit and utility bill analyses. Students familiarize themselves with the major report template. Floorplans and utility bills of the assigned buildings can then be examined to prepare for site visits.

The audits include building energy modelling with eQUEST (DOE 2016). The student primarily responsible for eQUEST modelling is selected before the site visit. This student receives different training from the students that collect data on lights and appliances during the site visit. The student modeler completes a mock eQUEST model during the second week of the semester.

Figure 3: Measuring light levels

The site visit is used to interview occupants, inventory energy- and water-consuming devices, and identify the building envelope and HVAC system. The eQUEST modeler focuses on the envelope and HVAC system, then assists with the inventory. Back on campus, students enter the inventory information into a spreadsheet model and calibrate it based on utility bills and occupant input regarding building operation. Building operation, envelope, and HVAC information are entered into eQUEST.
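The calibration method is not specified here. One simple approach, sketched below under stated assumptions, is to estimate annual consumption for each inventoried item as wattage × quantity × operating hours, then scale the operating hours of loads whose schedules are uncertain (lighting, plug loads) so the modeled total matches the billed annual kWh, while holding always-on loads fixed. The item names, wattages, hours, and billed total are hypothetical.

```python
def annual_kwh(items: list[dict]) -> float:
    """Estimated annual electric consumption: watts x quantity x hours / 1000."""
    return sum(it["watts"] * it["qty"] * it["hours"] / 1000 for it in items)


def calibrate_hours(items: list[dict], billed_kwh: float) -> tuple[float, list[dict]]:
    """Scale hours of adjustable items so the model matches the utility bills.

    Items flagged adjustable=False (e.g. refrigerators running 8760 h/yr) are
    held fixed; the remaining gap is attributed to uncertain operating hours.
    """
    fixed = annual_kwh([it for it in items if not it["adjustable"]])
    adjustable = annual_kwh([it for it in items if it["adjustable"]])
    if billed_kwh <= fixed or adjustable == 0:
        raise ValueError("bills cannot be matched by scaling adjustable loads")
    factor = (billed_kwh - fixed) / adjustable
    calibrated = [
        {**it, "hours": it["hours"] * factor} if it["adjustable"] else dict(it)
        for it in items
    ]
    return factor, calibrated


# Hypothetical inventory and billed total.
inventory = [
    {"name": "2-lamp T8 troffer", "watts": 59, "qty": 120, "hours": 2600, "adjustable": True},
    {"name": "refrigerator", "watts": 150, "qty": 2, "hours": 8760, "adjustable": False},
    {"name": "desktop PC", "watts": 100, "qty": 30, "hours": 2600, "adjustable": True},
]
billed = 35_388  # kWh/yr from twelve months of utility bills

factor, calibrated = calibrate_hours(inventory, billed)
print(annual_kwh(inventory), factor, annual_kwh(calibrated))  # 28836.0 1.25 35388.0
```

A single scaling factor is crude; where occupants can pin down some schedules, those items can be marked non-adjustable so the correction falls only on the genuinely uncertain loads.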

The spreadsheet and eQUEST models are used to estimate savings associated with a number of building modifications, e.g., lightbulb replacement, changing thermostat set points, occupancy sensors, window replacement, photovoltaic arrays, etc. Potential building modifications are selected based on problems observed during site visits, identified by building occupants, or identified by inspection of building data. Various economic parameters are determined for each action, such as present worth and payback period. The findings are presented in a major report and presentation to the client. In both, financially feasible actions are recommended.
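The economic screening of each candidate action can be sketched in a few lines. The Python below assumes uniform annual savings, no energy-price escalation, and end-of-year cash flows; it computes simple payback, the present worth of savings using the uniform-series present worth factor, net present worth, and the savings-to-investment ratio (SIR) that also appears in the report summary tables. The discount rate, study period, project cost, and savings are illustrative assumptions; federal projects would normally take rates and study periods from FEMP life-cycle cost guidance.

```python
def simple_payback(cost: float, annual_savings: float) -> float:
    """Years for undiscounted savings to repay the initial cost."""
    return cost / annual_savings


def present_worth(annual_savings: float, rate: float, years: int) -> float:
    """Present worth of a uniform annual savings stream (end-of-year, no escalation)."""
    if rate == 0:
        return annual_savings * years
    growth = (1 + rate) ** years
    return annual_savings * (growth - 1) / (rate * growth)


def evaluate(cost: float, annual_savings: float, rate: float, years: int) -> dict:
    """Economic parameters for one building modification."""
    pw = present_worth(annual_savings, rate, years)
    return {
        "payback_years": simple_payback(cost, annual_savings),
        "present_worth": pw,
        "net_present_worth": pw - cost,
        "sir": pw / cost,
        # One common screening rule: recommend when SIR > 1 (equivalently NPW > 0).
        "feasible": pw > cost,
    }


# Hypothetical LED retrofit: $20,000 installed, $4,000/yr saved, 3 % rate, 15 years.
result = evaluate(cost=20_000, annual_savings=4_000, rate=0.03, years=15)
for key, value in result.items():
    print(key, round(value, 2) if isinstance(value, float) else value)
```

For these hypothetical inputs the payback is 5 years and the SIR is roughly 2.4, so the action would be recommended. Simple payback ignores the time value of money and savings after the payback year, which is why the discounted measures are reported alongside it.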

Students interact with NJ DMAVA professional staff throughout the building audit clinic, including an NJ DMAVA-funded full-time Rowan employee on campus at least four days a week and the NJ DMAVA Energy Manager. The on-campus employee is present at both weekly meetings, though her primary focus is on students working on other NJ DMAVA projects. The NJ DMAVA Energy Manager meets with students at least once a month.

Figure 4: Identifying drafts with a thermal camera

One of the most important results of the natural reflective practice is a detailed list of deliverables that is crucial to student learning and evaluation and that enables students to create a client-acceptable report in a 15-week semester. These deliverables, given below, can be used to improve any PBL course.

- Background Memo – Each new student on a JSEC team must become familiar with the background material required to understand the project. This could be previous team reports, appropriate websites, training materials, journal articles, books, etc. These materials may be supplied to the student, or the student may need to seek out appropriate information. Each new student submits a memo demonstrating their familiarity with the team project. The memo is due by the end of the second week of the semester. Building audit teams read past reports and training materials created specifically for them.

- Schedule – Each team submits a schedule of tasks to be completed to successfully meet the project goal and objectives. The schedule aligns with the due dates in this list but contains greater detail, i.e., it includes the tasks needed to complete the deliverables. The schedule is due by the end of the second week but will be modified as needed during the semester. When faculty have a great deal of experience with a project topic, students are better served if a schedule is provided, much like a standard course. Building audit clinic teams are given a detailed schedule known to promote good results. Training occurs during the second week. Site visits are scheduled for the third week. This maximizes the time students have to analyze data, write the major report, and develop a presentation. Site visits conducted too early, i.e., during the second week, result in poor or incomplete data collection because students are not yet adequately trained. Training students on site does not work well and often results in the need for a second site visit to recollect data.

- Analyses – Analyses are commonly conducted on data collected from literature, experiments, field work, or prototypes. Teams make all related results available for the professor’s inspection, preferably in electronic form, e.g., an Excel file. They confer with the professor, or their designee, as needed to ensure data are analyzed properly. Preliminary Analyses, including documentation, are due by mid-semester. Final Analyses, including documentation, are due by the last day of the Finals period. Building audit teams use spreadsheets and eQUEST and are provided with spreadsheet templates.

- Major Report – Teams submit their major report multiple times over the semester, e.g., at 25%, 50%, 75%, 90%, and 100% completion. Multiple submissions must be reflected in their schedule. Reports are graded on Writing (spelling and grammar), Format (provided by the professor), and Content (correctness and completeness). Once an interim report is evaluated and returned, teams use the comments to improve their next submission. Teams meet with their supervisors to discuss comments, as appropriate. If a team chooses NOT to address a comment, they explain why. The importance of multiple report submissions cannot be overemphasized. It is crucial to improving student self-reflection, writing, and analytical abilities AND to ensuring the delivery of a client-acceptable report at the end of the semester. Failure to turn in reports multiple times generally results in an inferior report. Major reports are due by the last day of finals (unless an earlier date is required by the client). A very detailed, heavily commented report template has been developed by the Building Audit Center graduate students and professors. The template provides the format, describes the required content, and addresses common student questions via comments. This template helps students complete a professional report in one semester. Audit teams are strongly encouraged to submit reports every two to three weeks once the site visit and data entry are completed.

- Midterm & Final Presentation – Presentations include, at a minimum: introduction, background, methods used, results, conclusions, and future work recommendations. Presentations should “wow” the audience with content and graphics. Teams are encouraged to prepare for presentations with multiple practice runs. They may request a dry run with their professor. Teams should email a draft presentation at least two days before the presentation. The midterm presentation is given in week 7 or 8 of the semester. The final presentation is given during finals week to an audience that includes students and faculty who worked on other clinic projects, as well as clients. Requiring two presentations encourages students to reflect on the first when they modify it to create their final presentation. Building audit teams present to the Center faculty and graduate students, each other, and NJ DMAVA staff.

- Accomplishments Memo – Each student submits an individual memo describing their accomplishments. The memo includes a justification of their individual AND team’s grade. A preliminary memo is due during week 7 or 8. It should describe what the student has done so far and what they intend to do for the rest of the semester. The final memo is due by the last day of the Finals period.

- Peer Evaluations – Each student submits a Peer Evaluation of all team members, including themselves. The form of the peer evaluation is based on that of Kaufman et al. (1999). This is due twice each semester, within a week after the midterm presentation and by the last day of the Finals period (after the final presentation). When the midterm peer evaluations identify student concerns, the supervising professor meets with the team or individual student, as appropriate.

The building audit clinic has also benefited from the interaction between NJ DMAVA staff, Rowan faculty and Rowan graduate students. One important result is a checklist of audit requirements. It can be used as a starting point for anyone using building audits in a client-oriented PBL course.

- Pictures – Lighting, utility meters, water/mold/mildew issues, windows and doors, exterior, HVAC equipment, anything in need of repair;

- Installation Status Report – Assesses the condition of installation infrastructure, environmental programs, and support services using established Army-wide standards;

- Meters – Meter number & location;

- Inventories (in appendix and Excel file):

  - Complete inventory of lighting and appliances must be included in report appendix;

  - Lighting – Location, type of fixture (surface-mount, suspended, flush-mount,…), bulb type, number of bulbs per fixture, number of fixtures of each type, total bulbs, Estimated Annual Electric Consumption;

  - Appliances – Location, Type, Make/Model, Wattage, Quantity, Estimated Annual Electric Consumption;

- eQUEST Output;

- Spreadsheet and eQUEST Models;

- Any calculations with key assumptions;

- Include the savings-to-investment ratio (SIR) in the energy and water savings summary tables, which are used to quickly identify the financial terms of projects;

- Updated ESPM – ENERGY STAR Portfolio Manager is a free tool created by the U.S. Environmental Protection Agency (USEPA) used to benchmark and track building energy use, water use, and waste and recycling rates:

  - Update and confirm utility usage based on utility bills;

  - Update “Property Uses and Use Details”, adding appropriate Primary Functions with Gross Floor Area; and

- Complete Sustainable Buildings Checklist – Established strategies for maintaining federal buildings to be energy and water efficient, healthy, and sustainable.
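The lighting inventory columns in this checklist map naturally onto spreadsheet rows. The sketch below writes them to a CSV file that Excel can open, deriving Total Bulbs and Estimated Annual Electric Consumption. Wattage per bulb and annual operating hours are assumed extra inputs (they are not columns in the checklist), ballast losses are ignored, and the class name, function name, and example rooms are hypothetical.

```python
import csv
import io
from dataclasses import dataclass


@dataclass
class LightingEntry:
    location: str
    fixture_type: str        # surface-mount, suspended, flush-mount, ...
    bulb_type: str
    bulbs_per_fixture: int
    fixtures: int
    watts_per_bulb: float    # assumption: needed for the estimate, not a checklist column
    hours_per_year: float    # assumption: operating hours from occupant interviews

    def total_bulbs(self) -> int:
        return self.bulbs_per_fixture * self.fixtures

    def annual_kwh(self) -> float:
        # Estimated Annual Electric Consumption; ballast losses are ignored.
        return self.total_bulbs() * self.watts_per_bulb * self.hours_per_year / 1000


COLUMNS = ["Location", "Fixture Type", "Bulb Type", "Bulbs per Fixture",
           "Number of Fixtures", "Total Bulbs",
           "Estimated Annual Electric Consumption (kWh)"]


def write_lighting_csv(entries: list[LightingEntry], stream) -> None:
    """Write one header row plus one row per fixture group to a text stream."""
    writer = csv.writer(stream)
    writer.writerow(COLUMNS)
    for e in entries:
        writer.writerow([e.location, e.fixture_type, e.bulb_type, e.bulbs_per_fixture,
                         e.fixtures, e.total_bulbs(), round(e.annual_kwh())])


# Hypothetical entries for two rooms.
entries = [
    LightingEntry("Drill Hall", "suspended", "T8 fluorescent", 4, 24, 32, 3000),
    LightingEntry("Office 101", "flush-mount", "T8 fluorescent", 2, 6, 32, 2500),
]
buffer = io.StringIO()
write_lighting_csv(entries, buffer)
print(buffer.getvalue())
```

To produce the Excel file itself, the same function can be passed a file opened with `open("lighting_inventory.csv", "w", newline="")`; the appliance inventory would follow the same pattern with its own columns.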

4.2 Student Questionnaire

Feedback was obtained from students who had completed building audit clinics in Fall 2015, Spring 2016, or Fall 2016. Ninety-five percent of the respondents believed the preparation for Junior/Senior Engineering Clinics (JSEC) provided by Freshman and Sophomore Engineering Clinic was fair or better. None rated it poor or very poor. The responses were three excellent, nine very good, five good, three fair, and one ‘did not help or hinder’.

Twelve of the respondents were seniors who had completed three JSECs. One respondent had completed two JSECs, and eight were juniors who had completed only one. Of the thirteen students who had completed more than one JSEC, four believed they learned somewhat or much more in their building audit clinic than in other JSECs. Six believed they learned about the same and three somewhat less. None believed they learned much less.

When students were asked to rate their ability to conduct a future building audit, 24 % of the respondents stated ‘excellent’, 48 % stated ‘good’, and 29 % stated ‘OK’ (the total exceeds 100 % because of rounding). No respondent rated their ability as poor or very poor.

When students were asked to describe the best part of the building audit clinic, the most common response was the site visits (seven). Four respondents mentioned the real-world aspect of the project, e.g., one respondent said “it felt like I was an intern on a legitimate project, which made me feel like what I was doing was much more important than just a school class. I enjoyed it because it gave me a taste of professional experience.” Four respondents mentioned the teamwork aspect.

The effectiveness of the building audit training depended greatly on the graduate student who provided it. Respondents trained by one graduate student (Fall 2015 and Spring 2016) rated the training mostly favorably: one excellent, four very good, four good, three fair, and one ‘did not help or hinder’. Those trained by a second graduate student (Fall 2016) gave much lower ratings: two good, one fair, four poor, and one very poor.
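Because the two cohorts differ in size (13 and 8 respondents), comparing proportions makes the gap between them easier to see than raw counts. A minimal Python sketch using the reported counts; the grouping of labels into "good or better" and "poor or worse", and the names used, are illustrative assumptions rather than an analysis the authors report:

```python
# Training ratings by cohort, using the counts reported in the questionnaire.
RATINGS_GS1 = {  # first graduate student: Fall 2015 and Spring 2016
    "excellent": 1, "very good": 4, "good": 4, "fair": 3,
    "did not help or hinder": 1,
}
RATINGS_GS2 = {  # second graduate student: Fall 2016
    "good": 2, "fair": 1, "poor": 4, "very poor": 1,
}

# Illustrative groupings of the rating labels.
GOOD_OR_BETTER = {"excellent", "very good", "good"}
POOR_OR_WORSE = {"poor", "very poor"}


def share(ratings: dict[str, int], labels: set[str]) -> float:
    """Fraction of respondents in a cohort whose rating falls in `labels`."""
    total = sum(ratings.values())
    return sum(n for label, n in ratings.items() if label in labels) / total


for name, ratings in (("first graduate student", RATINGS_GS1),
                      ("second graduate student", RATINGS_GS2)):
    print(f"{name}: n={sum(ratings.values())}, "
          f"good or better {share(ratings, GOOD_OR_BETTER):.1%}, "
          f"poor or worse {share(ratings, POOR_OR_WORSE):.1%}")
# → first graduate student: n=13, good or better 69.2%, poor or worse 0.0%
# → second graduate student: n=8, good or better 25.0%, poor or worse 62.5%
```

With only 13 and 8 respondents, these proportions describe the two cohorts rather than support a statistical test; they simply restate the counts on a common scale.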

Students were asked to describe the most beneficial result of interacting with the two NJ DMAVA staff members involved in the building audit clinics. Five respondents had not interacted with the NJ DMAVA staff. Nine mentioned specific help they received, e.g., help with the report or the eQUEST model. Seven mentioned learning about the real world.

Respondents were very positive about the detailed schedule they received at the start of the semester. Eight believed it helped greatly, seven that it helped somewhat, and three that it ‘did not help or hinder’. None believed it hindered in any way. Three respondents stated that they never received it.

Respondents were extremely positive about the detailed report format provided at the beginning of the semester. Ten believed it helped greatly, nine that it helped somewhat, and two that it ‘did not help or hinder’. None believed it hindered in any way.

On average, respondents reported that their team turned in its major report (drafts and final) 3.75 times and received comments back from the supervising professor and/or graduate student 3.9 times. Because these two averages are nearly equal, it appears that a given draft was rarely commented on by both reviewers. Respondents were extremely positive about the importance of the comments, and the subsequent rewrites, to learning how to complete real-world projects: thirteen rated them very important, six important, and two somewhat important. No respondent rated the comments and rewrites as neutral or unimportant.
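A rough check of why the two averages point to single-reviewer feedback, assuming that comments from the professor and from the graduate student on the same draft would have been reported as two separate rounds. With $\bar{s}$ the mean number of submissions per team and $\bar{c}$ the mean number of comment rounds received:

```latex
\[
\frac{\bar{c}}{\bar{s}} = \frac{3.9}{3.75} = 1.04
\]
```

If both reviewers had commented on every submission, this ratio would be close to 2; a value near 1 is consistent with most drafts being reviewed by only one of them.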

Students were asked to rate the importance of knowing the client would use the final reports to make actual changes to NJ DMAVA buildings. Six respondents said it was very important, eight important, five somewhat important, and two ‘does not matter to me either way’. None rated it as unimportant.

5 Discussion

Based on their responses, the Rowan students believe they learned, on average, as much in the building audit clinic as in other JSECs. All rated their ability to conduct a future building audit as OK or better. The structure of the building audits used by the authors is similar to that reported by Wierzba et al. (2011), though it was developed independently. The Rowan audit clinics provide students opportunities to learn while gaining real-world experience through site visits, client interaction, teamwork, and the creation of professional reports and presentations. The importance of exposure to real-world experience is also emphasized by other researchers (Wu and Luo 2016, Balzotti and Rawlins 2016, Leicht et al. 2015, Kramer-Simpson et al. 2015, Park and Park 2012, and Cantalapiedra et al. 2006); however, most of these studies only simulated it, using on-campus or virtual sites and/or hypothetical clients.

Student assessments of the building audit training led the authors to recognize the importance of the graduate student’s experience. The graduate student responsible for the Fall 2015 and Spring 2016 training was in her second year with the Center. She had supervised building audit clinics for two to three semesters and, as an undergraduate, had participated in two building audit JSECs and two unrelated JSECs. This experience with PBL in general, and with building audits and JSEC specifically, prepared her well to train undergraduate students to perform building audits. The graduate student responsible for the Fall 2016 training had no experience supervising or participating in building audit clinics, limited experience with PBL, and no experience with JSECs. She was dependent on the training materials alone, and this was not sufficient to produce a quality training experience at the start of the semester. Rowan students were nevertheless able to overcome the poor training and create client-acceptable reports because of other strengths of the program: the schedule and report template, and regular interaction over the entire semester among undergraduates, faculty, NJ DMAVA staff, and the graduate student, especially feedback on multiple report submissions.

In the future:

- Better training materials must be available to the graduate student;

- Graduate student selection criteria should be weighted toward PBL experience, with directly related experience valued most highly (e.g., building audits, then JSEC, then sustainable engineering or development); and

- Inexperienced graduate students should obtain experience by completing an audit of their own before attempting to train others.

Client involvement in the building audit clinic was important to and appreciated by the Rowan students. O’Brien and Sarkis (2014), Kramer-Simpson et al. (2015), and Balzotti and Rawlins (2016) all point to valuable roles clients can play in PBL. Most Rowan students were motivated by the knowledge that the client would use their reports. Many also appreciated being able to learn directly from the client. The NJ DMAVA Energy Manager uses the data supplied in the student teams’ major reports to justify requests for funds to make facilities more sustainable. He has to present an engineering/financial analysis to get funds to fix a given issue. Thus, each recommendation needs to be complete: assumptions stated, sources included, and financial numbers calculated properly. This motivates students to complete their reports properly. According to O’Brien and Sarkis (2014), finding clients can be difficult over the long run. This has not been a problem for the Rowan building audit clinic, due to its long-term agreement with NJ DMAVA.

Rowan students appreciated the schedule provided at the start of the clinic. Effective scheduling is important if students are to complete building audits within a 14- or 15-week semester, as was also reported by Wu and Luo (2016) and O’Brien and Sarkis (2014). Rowan Building Audit Center experience suggests that training should occur during week two and site visits during week three.

The report template and feedback on multiple report submissions are critical to student learning and client satisfaction. The detailed building audit report template provided at the beginning of the semester is very much appreciated by the students. In 2012, students were merely given copies of previous reports. This proved to be inadequate and was soon replaced by a commented report template. The comments in the template anticipate student questions and direct their efforts efficiently. After three years, the template matured into an extremely useful support device. The required submission of multiple drafts, each commented on by a faculty member or graduate student, is also greatly appreciated by the students. O’Brien and Sarkis (2014) recommend a similar course of action. Many technical writers learn to write professional reports in this way. Unfortunately, many students do not experience this iterative feedback process until they enter graduate school or find permanent employment. The PBL building audit clinic provides that experience to undergraduates.

6 Conclusions

The Henry M Rowan College of Engineering curriculum exposes students to project based learning in every semester of their undergraduate education. The increasing complexity of Freshman and Sophomore Engineering Clinics prepares students for the ambiguity and open-ended problems they must deal with to complete Junior and Senior Engineering Clinics. Recent building audit students report that their freshman and sophomore clinics prepared them sufficiently well for JSEC. Given that a building audit requires special knowledge and skills, this is probably the best that can be expected from a course sequence that serves six engineering majors.

The long-term relationship with NJ DMAVA, formalized by the 10-year Rowan Building Audit Center, provided an opportunity to create a better PBL building audit course than would be possible on an ad hoc basis. Early in the life of the Rowan Building Audit Center, the supervising graduate student often had to rework some or all of each semester’s student reports. The combination of elements described here (scheduling, training, deliverables, checklists, and, perhaps most importantly, a detailed report template and multiple reviewed submissions) has made that an extremely rare event. Students are satisfied, based on their questionnaire responses. The client is satisfied, as evidenced by recent awards, over $1M in identified annual savings, the client’s use of student recommendations, and its efforts to convince other government agencies to duplicate the program.

The deliverables list presented here provides a framework to scaffold and assess individual performance in a team-based setting that can be used in any project-based learning course. It is a useful tool for ensuring students learn from project-based experiences and produce client-acceptable reports. In particular, recent building audit students valued working from a major report template and receiving detailed comments on their major reports after each of multiple submissions. They also appreciated being able to interact with clients.

When PBL includes supervision by a graduate student, direct experience in both the project area and the course format is important. An experienced graduate student is better able to provide good training and supervision. For the building audit clinics at Rowan University, the best potential candidates are graduating undergraduate students who participated in building audit JSECs.

Teachers considering the implementation of a building audit project in a sustainability curriculum are encouraged to use the best-practices presented in this paper. The building audit clinics at Rowan are still a work in progress, and improvements occur each semester. Currently, a major focus is providing sufficient training for future graduate students.

Acknowledgements

This work was supported by the NJ Department of Military and Veterans Affairs and the NJ Army National Guard.

References

Balzotti, J., Rawlins, J. (2016). Client-Based Pedagogy Meets Workplace Simulation: Developing Social Processes in the Arisoph Case Study. IEEE Transactions on Professional Communication. 59 (2), 140-152.

Cantalapiedra, I., Bosch, M., López, F. (2006). Involvement of final architecture diploma projects in the analysis of the UPC buildings energy performance as a way of teaching practical sustainability. Journal of Cleaner Production. 14, 958-962.

Castelli, P. (2016). Reflective leadership review: a framework for improving organisational performance. Journal of Management Development. 35 (2), 217-236.

Chandrupatla, T., Dusseau, R., Schmalzel, J., Slater, S. (1996). Development of multifunctional laboratories in a new engineering school. Proceedings of ASEE Annual Conference, American Society for Engineering Education.

Dahm, K., Riddell, W., Constans, E., Courtney, J., Farrell, S., Harvey, R., Jansson, P., von Lockette, P. (2009). Implementing the Converging-Diverging Model of Design in a Sequence of Sophomore Projects. Advances in Engineering Education, 1 (3).

DOD (2009). Department of Defense Instruction Number 4170.11. Department of Defense. Retrieved from http://dtic.mil/whs/directives/corres/pdf/417011p.pdf.

DOE (2016, December 14). eQUEST: the QUick Energy Simulation Tool. US Department of Energy. Retrieved from http://www.doe2.com/equest.

DOE (1999). Energy Efficiency for Buildings. Directory of Building Energy Software. Washington, D.C.: US Department of Energy. Retrieved from http://www.eren.doe.gov/buildings/tools_directory.

EERE (2017, February 27). 2016 Federal Energy and Water Management Award Winners. Office of Energy Efficiency & Renewable Energy. Retrieved from http://energy.gov/eere/femp/2016-federal-energy-and-water-management-award-winners.

Energy Star (2014). Sustainable Buildings Checklist. Retrieved from http://energy.gov/sites/prod/files/2015/09/f26/sustainable_buildings_checklist.pdf.

Energy Star (2016). Portfolio Manager Quick Start Guide. Retrieved from http://www.energystar.gov/sites/default/files/tools/Portfolio Manager Quick Start Guide_0.pdf

EPA (2009). Buildings and their Impact on the Environment: A Statistical Summary. Archive Document. Washington, D.C.: US Environmental Protection Agency. Retrieved from http://archive.epa.gov/greenbuilding/web/pdf/gbstats.pdf.

Everett, J., Newell, J., Dahm, K., Kadlowec, J., Sukumaran, B. (2004). Engineering Clinic: Bringing practice back into the engineering curriculum. Engineering Education Conference, University of Wolverhampton, England, UK.

Gillespie, J., Bhatia, K., Jansson, P., Riddell, W. (2008). Rowan University’s Clean Energy Program. Proceedings of the 2008 ASEE Annual Conference, American Society for Engineering Education.

GPO (2007). Energy Independence and Security Act of 2007. Public Law 110–140—DEC. 19, 2007. Washington, D.C.: Government Publishing Office. Retrieved from http://www.gpo.gov/fdsys/pkg/PLAW-110publ140/pdf/PLAW-110publ140.pdf.

Kaufman, D., Fuller, H., Felder, R. (1999). Peer Ratings In Cooperative Learning Teams. Proceedings of the ASEE 1999 Annual Conference, Charlotte, North Carolina. Retrieved from https://peer.asee.org/7880.

Kimmons, S. (2016). Army recognizes community partnerships that saved millions in costs. US Army. Retrieved from http://www.army.mil/article/179247.

Kramer-Simpson, E., Newmark, J., Ford, J. (2015). Learning Beyond the Classroom and Textbook: Client Projects’ Role in Helping Students Transition From School to Work. IEEE Transactions on Professional Communication. 58 (1), 106-122.

Leicht, R., Zappe, S., Hochstedt, K., Whelton, M. (2015). Employing an Energy Audit Field Context for Scenario-Based Learning Activities. International Journal of Engineering Education, 31 (4), 1033–1047.

Loughran, J. (2002). Effective Reflective Practice: In Search of Meaning in Learning about Teaching. Journal of Teacher Education. 53 (1), 33-43.

Martínez, F., Herrero, L., de Pablo, S. (2011). Project-Based Learning and Rubrics in the Teaching of Power Supplies and Photovoltaic Electricity. IEEE Transactions on Education, 54 (1), 87-96.

O’Brien, W., Sarkis, J. (2014). The potential of community-based sustainability projects for deep learning initiatives. Journal of Cleaner Production. 62, 48-61.

Park, K., Park, S. (2012). Development of professional engineers’ authentic contexts in blended learning environments. British Journal of Educational Technology. 43 (1), E14–E18.

Pérez-Lombard, L., Ortiz, J., Pout, C. (2008). A review on buildings energy consumption information. Energy and Buildings. 40 (3), 394-398.

Riddell, W., Simone, M., Farrell, S., Jansson, P. (2008). Communication in a Project Based Learning Design Course. Proceedings of the ASEE 2008 Annual Conference & Exposition. Pittsburgh, Pennsylvania. Retrieved from https://peer.asee.org/3318.

Riddell, W., Courtney, J., Constans, E., Dahm, K., Harvey, R., von Lockette, P. (2010). Making communication matter: Integrating instruction, projects and assignments to teach writing and design. Advances in Engineering Education. 2 (2), 1-31.

Schon, D. (1983). The Reflective Practitioner: How Professionals Think in Action. Basic Books, New York.

Szolosi, A. (2014). Energy Crunch: Facilitating Students’ Understanding of Eco-Efficiency. Journal of Leisure Studies and Recreation Education. 29 (2), 53-61.

US Army (2012). Installation Status Report Program. Army Regulation 210–14, Washington DC.

UN (2017). Sustainable Development Goals. United Nations. Retrieved from http://www.un.org/sustainabledevelopment, accessed 3/20/2017.

Wierzba, A., Morgenstern, M., Meyer, S., Ruggles, T., Himmelreich, J. (2011). A study to optimize the potential impact of residential building energy audits. Energy Efficiency. 4 (4), 587–597.

Wu, W., Luo, Y. (2016). Pedagogy and assessment of student learning in BIM and sustainable design and construction. Journal of Information Technology in Construction. 21, 218-233.